This lesson continues the series with the second workshop on reproducible science. It covers additional open-source tools for researchers and data scientists, such as the R programming language and associated tools like RStudio and R Markdown. It also introduces Python, IPython notebooks, and Google Colab, and provides hands-on tutorials on creating a Binder environment and building containers with Docker and Singularity.

Difficulty level: Beginner

Duration: 1:16:04

Speakers: Erin Dickie and Sejal Patel

This is a tutorial on how to simulate neuronal spiking in brain microcircuit models, as well as how to analyze, plot, and visualize the corresponding data.

Difficulty level: Intermediate

Duration: 1:39:50

Speaker: Frank Mazza

In this third and final hands-on tutorial from the Research Workflows for Collaborative Neuroscience workshop, you will learn about workflow orchestration using open source tools like DataJoint and Flyte.

Difficulty level: Intermediate

Duration: 22:36

Speaker: Daniel Xenes

In this hands-on session, you will learn how to explore and work with DataLad datasets, containers, and structures using Jupyter notebooks.

Difficulty level: Beginner

Duration: 58:05

Speaker: Michał Szczepanik

This lesson contains practical exercises that accompany the first few lessons of the Neuroscience for Machine Learners (Neuro4ML) course.

Difficulty level: Intermediate

Duration: 5:58

Speaker: Dan Goodman

This lesson introduces practical exercises that accompany the Synapses and Networks portion of the Neuroscience for Machine Learners course.

Difficulty level: Intermediate

Duration: 3:51

Speaker: Dan Goodman

In this lesson, you will learn how to train spiking neural networks (SNNs) with a surrogate gradient method.

Difficulty level: Intermediate

Duration: 11:23

Speaker: Dan Goodman

In this lesson, you will learn about one particular aspect of decision making: reaction times. In other words, how long does it take to make a decision based on a stream of information arriving continuously over time?

Difficulty level: Intermediate

Duration: 6:01

Speaker: Dan Goodman

In this tutorial, you will learn how to use the TVB-NEST toolbox on your local computer.

Difficulty level: Beginner

Duration: 2:16

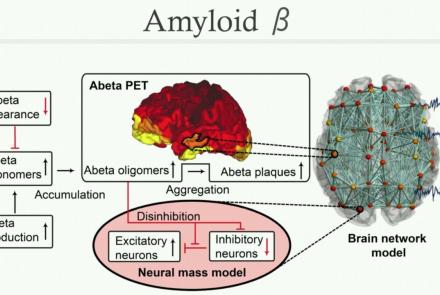

This tutorial provides instruction on how to perform multi-scale simulation of Alzheimer's disease on The Virtual Brain Simulation Platform.

Difficulty level: Beginner

Duration: 29:08

This tutorial provides instruction on how to simulate brain tumors with TVB (reproducing the publication Marinazzo et al. 2020, NeuroImage). It comprises a didactic video, Jupyter notebooks, and a full dataset for constructing virtual brains from patients and healthy controls.

Difficulty level: Intermediate

Duration: 10:01

This tutorial on modelling strokes in TVB includes a didactic video and Jupyter notebooks (reproducing the publication Falcon et al. 2016, eNeuro).

Difficulty level: Intermediate

Duration: 7:43

This lecture covers concepts associated with neural nets, including rotation and squashing, and is a part of the Deep Learning Course at New York University's Center for Data Science (CDS).

Difficulty level: Intermediate

Duration: 1:01:53

Speaker: Alfredo Canziani

This lecture covers neural network training (tools, classification with neural nets, and a PyTorch implementation) and is part of the Deep Learning Course at NYU's Center for Data Science.

Difficulty level: Intermediate

Duration: 1:05:47

Speaker: Alfredo Canziani

This lecture discusses the properties of natural signals and convolutional nets in practice, and is part of the Deep Learning Course at NYU's Center for Data Science.

Difficulty level: Intermediate

Duration: 1:09:12

Speaker: Alfredo Canziani

This lecture covers recurrent neural networks, both vanilla and gated (LSTM), and is part of the Deep Learning Course at NYU's Center for Data Science.

Difficulty level: Intermediate

Duration: 1:05:36

Speaker: Alfredo Canziani

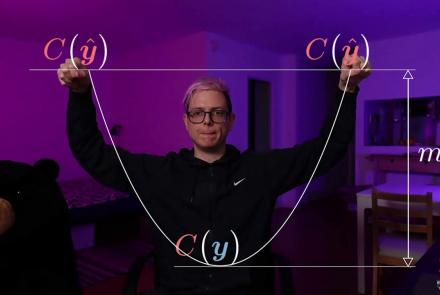

This tutorial traces the progression from latent-variable energy-based models (LV-EBMs) to target propagation to autoencoders (vanilla, denoising, contractive, and variational), and is part of the Advanced Energy-Based Models module of the Deep Learning Course at NYU's Center for Data Science. Prerequisites for this tutorial include Energy-Based Models I, Energy-Based Models II, Energy-Based Models III, Energy-Based Models IV, and an Introduction to Data Science or a graduate-level machine learning course.

Difficulty level: Advanced

Duration: 1:00:34

Speaker: Alfredo Canziani

This tutorial covers autoencoders, denoising autoencoders, and variational autoencoders (VAEs) with PyTorch, as well as generative adversarial networks (GANs), with code. It is part of the Advanced Energy-Based Models module of the Deep Learning Course at NYU's Center for Data Science. Prerequisites for this tutorial include Energy-Based Models I, Energy-Based Models II, Energy-Based Models III, Energy-Based Models IV, Energy-Based Models V, and an Introduction to Data Science or a graduate-level machine learning course.

Difficulty level: Advanced

Duration: 1:07:50

Speaker: Alfredo Canziani

This tutorial covers advanced concepts of energy-based models. It is part of the Associative Memories module of the Deep Learning Course at NYU's Center for Data Science.

Difficulty level: Advanced

Duration: 1:12:00

Speaker: Alfredo Canziani

This tutorial covers graph convolutional networks and is part of the Deep Learning Course at NYU's Center for Data Science. Prerequisites for this module include Modules 1-5 of this course and an Introduction to Data Science or a graduate-level machine learning course.

Difficulty level: Advanced

Duration: 57:33

Speaker: Alfredo Canziani
