This lesson describes the fundamentals of genomics, from central dogma to design and implementation of GWAS, to the computation, analysis, and interpretation of polygenic risk scores.

Difficulty level: Intermediate

Duration: 1:28:16

Speaker: Dan Felsky

This lesson provides an overview of the database of Genotypes and Phenotypes (dbGaP), which was developed to archive and distribute the data and results from studies that have investigated the interaction of genotype and phenotype in humans.

Difficulty level: Beginner

Duration: 48:22

Speaker: Michael Feolo

This lesson continues with the second workshop on reproducible science, focusing on additional open source tools for researchers and data scientists, such as the R programming language for data science and associated tools like RStudio and R Markdown. Users are also introduced to Python, IPython notebooks, and Google Colab, and are given hands-on tutorials on how to create a Binder environment and how to build containers in Docker and Singularity.

Difficulty level: Beginner

Duration: 1:16:04

Speaker: Erin Dickie and Sejal Patel

This talk describes the relevance and power of using brain atlases as part of one's data integration pipeline.

Difficulty level: Beginner

Duration: 15:37

Speaker: Timo Dickscheid

In this lesson, you will learn what data management plans are and why data sharing is important.

Difficulty level: Beginner

Duration: 44:24

Speaker: Jenny Muilenburg

This quick visual walkthrough presents the steps required to upload data into a brainlife project using the graphical user interface (GUI).

Difficulty level: Beginner

Duration: 2:00

This short walkthrough documents the steps needed to find a dataset in OpenNeuro, a free and open platform for sharing MRI, MEG, EEG, iEEG, ECoG, ASL, and PET data, and import it directly to a brainlife project.

Difficulty level: Beginner

Duration: 0:35

This lesson describes and shows four different ways one may upload their data to brainlife.io.

Difficulty level: Beginner

Duration: 6:35

This lecture covers why data sharing and other collaborative practices are important, how these practices are developed, and the challenges involved in their development and implementation.

Difficulty level: Beginner

Duration: 11:41

Speaker: Joost Wagenaar

This lecture discusses the FAIR principles as they apply to electrophysiology data and metadata, the building blocks for community tools and standards, platforms and grassroots initiatives, and the challenges therein.

Difficulty level: Beginner

Duration: 8:11

Speaker: Thomas Wachtler

This lecture contains an overview of electrophysiology data reuse within the EBRAINS ecosystem.

Difficulty level: Beginner

Duration: 15:57

Speaker: Andrew Davison

This lecture contains an overview of the Distributed Archives for Neurophysiology Data Integration (DANDI) archive, its ties to FAIR and open-source, integrations with other programs, and upcoming features.

Difficulty level: Beginner

Duration: 13:34

Speaker: Yaroslav O. Halchenko

This lesson provides a short overview of the main features of the Canadian Open Neuroscience Platform (CONP) Portal, a web interface that facilitates open science for the neuroscience community by simplifying global access to and sharing of datasets and tools. The Portal internalizes the typical cycle of a research project, beginning with data acquisition, followed by data processing with published tools, and ultimately the publication of results with a link to the original dataset.

Difficulty level: Beginner

Duration: 14:03

Speaker: Samir Das, Tristan Glatard

This lesson introduces the Brain Imaging Data Structure (BIDS), the community standard for organizing, curating, and sharing neuroimaging and associated data. The session focuses on understanding the BIDS framework, its data structure, and its validation processes.

Difficulty level: Intermediate

Duration: 38:52

Speaker: Cyril Pernet

This session moves from BIDS basics into analysis workflows, focusing on how to turn raw, BIDS-organized data into derivatives using BIDS Apps and containers for reproducible processing. It compares end-to-end pipelines across fMRI and PET (and notes EEG/MEG), explains typical preprocessing choices, and shows how standardized inputs plus containerized tools (Docker/Apptainer) yield consistent, auditable outputs.

Difficulty level: Intermediate

Duration: 56:03

Speaker: Martin Nørgaard

The session explains GDPR rules around data sharing for research in Europe, the distinction between law and ethics, and introduces practical solutions for securely sharing sensitive datasets. Researchers have more flexibility than commonly assumed: scientific research is considered a public interest task, so explicit consent for data sharing isn’t legally required, though transparency and informing participants remain ethically important. The talk also introduces publicneuro.eu, a controlled-access platform that enables sharing neuroimaging datasets with open metadata, DOIs, and customizable access restrictions while ensuring GDPR compliance.

Difficulty level: Intermediate

Duration: 31:12

Speaker: Cyril Pernet

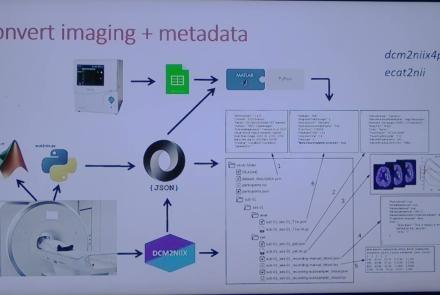

This session introduces the PET-to-BIDS (PET2BIDS) library, a toolkit designed to simplify the conversion and preparation of PET imaging datasets into BIDS-compliant formats. It supports multiple data types and formats (e.g., DICOM, ECAT7+, NIfTI, JSON), integrates with Excel-based metadata, and provides automated routines for metadata updates, blood data conversion, and JSON synchronization. PET2BIDS improves human readability by mapping complex reconstruction names into standardized, descriptive labels and offers extensive documentation, examples, and video tutorials to make adoption easier for researchers.

Difficulty level: Intermediate

Duration: 9:23

Speaker: Cyril Pernet

This session introduces the PET-to-BIDS (PET2BIDS) library, a toolkit designed to simplify the conversion and preparation of PET imaging datasets into BIDS-compliant formats. It supports multiple data types and formats (e.g., DICOM, ECAT7+, NIfTI, JSON), integrates with Excel-based metadata, and provides automated routines for metadata updates, blood data conversion, and JSON synchronization. PET2BIDS improves human readability by mapping complex reconstruction names into standardized, descriptive labels and offers extensive documentation, examples, and video tutorials to make adoption easier for researchers.

Difficulty level: Intermediate

Duration: 41:04

Speaker: Martin Nørgaard

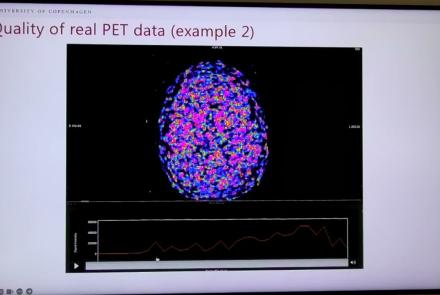

This session dives into practical PET tooling on BIDS data—showing how to run motion correction, register PET↔MRI, extract time–activity curves, and generate standardized PET-BIDS derivatives with clear QC reports. It introduces modular BIDS Apps (head-motion correction, TAC extraction), a full pipeline (PETPrep), and a PET/MRI defacer, with guidance on parameters, outputs, provenance, and why Dockerized containers are the reliable way to run them at scale.

Difficulty level: Intermediate

Duration: 1:05:38

Speaker: Martin Nørgaard

This session introduces two PET quantification tools built to work with BIDS/BIDS-derivatives and containers: bloodstream, for processing arterial blood data, and kinfitr, for kinetic modeling and quantification. Bloodstream fuses autosampler and manual measurements (whole blood, plasma, parent fraction) using interpolation or fitted models (incl. hierarchical GAMs) to produce a clean arterial input function (AIF) and whole-blood curves, along with rich QC reports. TAC data (e.g., from PETPrep) and blood data (e.g., from bloodstream) can then be ingested by kinfitr to run reproducible, GUI-driven analyses: define combined ROIs, calculate weighting factors, estimate blood–tissue delay, choose and chain models (e.g., 2TCM → 1TCM with parameter inheritance), and export parameters and diagnostics. Both are available as Docker apps; the workflows emphasize configuration files, reports, and standard outputs to support transparency and reuse.

Difficulty level: Intermediate

Duration: 1:20:56

Speaker: Granville Matheson
