Similarity Network Fusion (SNF) is a computational method for integrating data across different kinds of measurements, designed to exploit both the shared and the complementary information in different data types. This workshop walks participants through running SNF on EEG and genomic data using RStudio.

Difficulty level: Intermediate

Duration: 1:21:38

Speaker: Dan Felsky

This lesson continues from part one of the lecture Ontologies, Databases, and Standards, taking a deeper look at ontologies and knowledge graphs.

Difficulty level: Intermediate

Duration: 50:18

Speaker: Jeff Grethe

This lesson characterizes different types of learning in a neuroscientific and cellular context, and various models employed by researchers to investigate the mechanisms involved.

Difficulty level: Intermediate

Duration: 3:54

Speaker: Dan Goodman

In this lesson, you will learn about different approaches to modeling learning in neural networks, focusing in particular on how system parameters such as firing rates and synaptic weights affect a network.

Difficulty level: Intermediate

Duration: 9:40

Speaker: Dan Goodman

In this lesson, you will hear about some of the open issues in the field of neuroscience, as well as a discussion of whether neuroscience works and how we can know.

Difficulty level: Intermediate

Duration: 6:54

Speaker: Marcus Ghosh

This lecture and tutorial focus on measuring human functional brain networks and on how to account for the inherent variability within those networks.

Difficulty level: Intermediate

Duration: 50:44

Speaker: Caterina Gratton

Learn how to create a standard extracellular electrophysiology dataset in NWB using Python.

Difficulty level: Intermediate

Duration: 23:10

Speaker: Ryan Ly

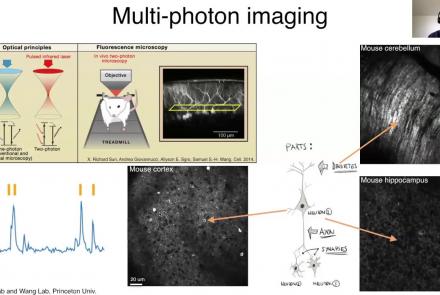

Learn how to create a standard calcium imaging dataset in NWB using Python.

Difficulty level: Intermediate

Duration: 31:04

Speaker: Ryan Ly

In this tutorial, you will learn how to create a standard intracellular electrophysiology dataset in NWB using Python.

Difficulty level: Intermediate

Duration: 20:23

Speaker: Pamela Baker

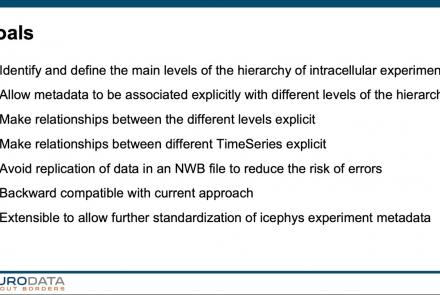

In this tutorial, you will learn how to use the icephys-metadata extension to enter metadata describing your experimental paradigm.

Difficulty level: Intermediate

Duration: 27:18

Speaker: Oliver Ruebel

In this tutorial, users learn how to create a standard extracellular electrophysiology dataset in NWB using MATLAB.

Difficulty level: Intermediate

Duration: 45:46

Speaker: Ben Dichter

Learn how to create a standard calcium imaging dataset in NWB using MATLAB.

Difficulty level: Intermediate

Duration: 39:10

Speaker: Ben Dichter

Learn how to create a standard intracellular electrophysiology dataset in NWB.

Difficulty level: Intermediate

Duration: 20:22

Speaker: Pamela Baker

This lesson gives an overview of the Brainstorm package for analyzing extracellular electrophysiology, including preprocessing, spike sorting, trial alignment, and spectrotemporal decomposition.

Difficulty level: Intermediate

Duration: 47:47

Speaker: Konstantinos Nasiotis

This lesson provides an overview of the CaImAn package, as well as a demonstration of its use with NWB.

Difficulty level: Intermediate

Duration: 44:37

Speaker: Andrea Giovannucci

This lesson gives an overview of the SpikeInterface package, including demonstrations of data loading, preprocessing, spike sorting, and comparison of spike sorters.

Difficulty level: Intermediate

Duration: 1:10:28

Speaker: Alessio Buccino

In this lesson, users will learn about the NWBWidgets package, including its coverage of different data types and how to build custom widgets within this framework.

Difficulty level: Intermediate

Duration: 47:15

Speaker: Ben Dichter

This lecture gives an overview of how to prepare and preprocess neuroimaging (EEG/MEG) data for use in The Virtual Brain (TVB).

Difficulty level: Intermediate

Duration: 1:40:52

Speaker: Paul Triebkorn

This is the introductory module of the Deep Learning Course at NYU's Center for Data Science (CDS), a course that covered the latest techniques in deep learning and representation learning, focusing on supervised and unsupervised deep learning, embedding methods, metric learning, and convolutional and recurrent nets, with applications to computer vision, natural language understanding, and speech recognition.

Difficulty level: Intermediate

Duration: 50:17

Speaker: Yann LeCun and Alfredo Canziani

This module covers the concepts of gradient descent and the backpropagation algorithm and is a part of the Deep Learning Course at NYU's Center for Data Science.

Difficulty level: Intermediate

Duration: 1:51:03

Speaker: Yann LeCun
