This lesson discusses the FAIR principles and the methods currently being developed to assess FAIRness.

Difficulty level: Beginner

Duration:

Speaker: Michel Dumontier

This lecture presents an overview of functional brain parcellations, as well as a set of tutorials on bootstrap analysis of stable clusters (BASC) for fMRI brain parcellation.

Difficulty level: Advanced

Duration: 50:28

Speaker: Pierre Bellec
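The core idea behind BASC is to measure how reliably two brain regions end up in the same cluster when the data are resampled. The sketch below is a simplified illustration under loudly stated assumptions, not the method taught in the tutorials: it resamples time points with a plain i.i.d. bootstrap (the published method uses more elaborate resampling and clustering steps) and clusters regions with a hypothetical correlation-threshold rule. All function names are illustrative.

```python
import random
from statistics import mean


def corr(x, y):
    """Pearson correlation of two equal-length series (0.0 if either is constant)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / (sxx * syy) ** 0.5


def threshold_clusters(series, threshold):
    """Hypothetical stand-in clusterer: connected components of the graph that
    links two regions when their correlation exceeds `threshold`."""
    n = len(series)
    labels = [-1] * n
    next_label = 0
    for start in range(n):
        if labels[start] != -1:
            continue
        labels[start] = next_label
        stack = [start]
        while stack:  # depth-first search over the thresholded graph
            i = stack.pop()
            for j in range(n):
                if labels[j] == -1 and corr(series[i], series[j]) > threshold:
                    labels[j] = next_label
                    stack.append(j)
        next_label += 1
    return labels


def stability_matrix(series, n_boot=100, threshold=0.5, seed=0):
    """S[i][j] = fraction of bootstrap replicates in which regions i and j
    are assigned to the same cluster."""
    rng = random.Random(seed)
    n_regions, n_time = len(series), len(series[0])
    counts = [[0] * n_regions for _ in range(n_regions)]
    for _ in range(n_boot):
        # Resample time points with replacement (plain i.i.d. bootstrap).
        idx = [rng.randrange(n_time) for _ in range(n_time)]
        sample = [[ts[t] for t in idx] for ts in series]
        labels = threshold_clusters(sample, threshold)
        for i in range(n_regions):
            for j in range(n_regions):
                if labels[i] == labels[j]:
                    counts[i][j] += 1
    return [[c / n_boot for c in row] for row in counts]
```

Values of S near 1 mark region pairs that cluster together consistently; a consensus parcellation can then be obtained by clustering S itself.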

This tutorial walks participants through the application of dynamic causal modelling (DCM) to fMRI data using MATLAB. Participants are also shown various forms of DCM, how to generate and specify different models, and how to fit them to simulated neural and BOLD data.

This lesson corresponds to slides 158-187 of the PDF below.

Difficulty level: Advanced

Duration: 1:22:10

Speaker: Peter Bedford, Povilas Karvelis

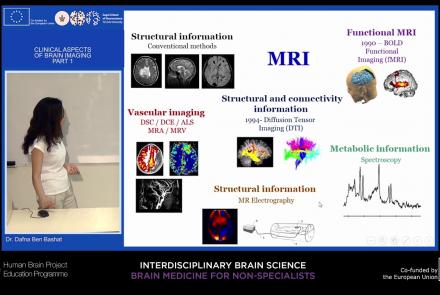

This lecture provides an overview of neuroimaging techniques and their clinical applications.

Difficulty level: Beginner

Duration: 45:29

Speaker: Dafna Ben Bashat

This lecture covers the needs and challenges involved in creating a FAIR ecosystem for neuroimaging research.

Difficulty level: Beginner

Duration: 12:26

Speaker: Camille Maumet

This lecture covers how to use the NIDM data format within BIDS to make your datasets more searchable, and how to optimize your dataset searches.

Difficulty level: Beginner

Duration: 12:33

Speaker: David Keator

This lecture covers the processes, benefits, and challenges involved in designing, collecting, and sharing FAIR neuroscience datasets.

Difficulty level: Beginner

Duration: 11:35

Speaker: Julie Boyle & Valentina Borghesani

This lecture covers positron emission tomography (PET) imaging and the Brain Imaging Data Structure (BIDS), and how they work together within the PET-BIDS standard to make neuroscience more open and FAIR.

Difficulty level: Beginner

Duration: 12:06

Speaker: Melanie Ganz

This lecture covers the benefits and difficulties involved in re-using open datasets, and the role metadata plays in that process.

Difficulty level: Beginner

Duration: 11:20

Speaker: Elizabeth DuPre

This lecture provides guidance on the ethical considerations the clinical neuroimaging community faces when applying the FAIR principles to their research.

Difficulty level: Beginner

Duration: 13:11

Speaker: Gustav Nilsonne

This lecture covers advanced concepts of energy-based models. The lecture is part of the Advanced Energy-Based Models module of the Deep Learning Course at NYU's Center for Data Science. Prerequisites for this course include: Energy-Based Models I, Energy-Based Models II, and an Introduction to Data Science or a Graduate Level Machine Learning course.

Difficulty level: Advanced

Duration: 1:54:22

Speaker: Yann LeCun

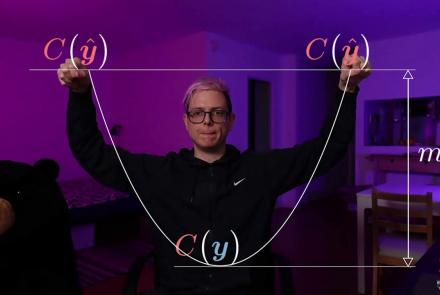

This lecture covers advanced concepts of energy-based models. The lecture is part of the Advanced Energy-Based Models module of the Deep Learning Course at NYU's Center for Data Science. Prerequisites for this course include: Energy-Based Models I, Energy-Based Models II, Energy-Based Models III, and an Introduction to Data Science or a Graduate Level Machine Learning course.

Difficulty level: Beginner

Duration: 56:41

Speaker: Alfredo Canziani

This lecture covers advanced concepts of energy-based models. The lecture is part of the Advanced Energy-Based Models module of the Deep Learning Course at NYU's Center for Data Science. Prerequisites for this course include: Energy-Based Models I, Energy-Based Models II, Energy-Based Models III, and an Introduction to Data Science or a Graduate Level Machine Learning course.

Difficulty level: Advanced

Duration: 1:54:43

Speaker: Yann LeCun

This tutorial covers the progression from latent-variable energy-based models (LV-EBMs) to target propagation to autoencoders (vanilla, denoising, contractive, and variational), and is part of the Advanced Energy-Based Models module of the Deep Learning Course at NYU's Center for Data Science. Prerequisites for this course include: Energy-Based Models I, Energy-Based Models II, Energy-Based Models III, Energy-Based Models IV, and an Introduction to Data Science or a Graduate Level Machine Learning course.

Difficulty level: Advanced

Duration: 1:00:34

Speaker: Alfredo Canziani

This lecture covers advanced concepts of energy-based models. The lecture is part of the Advanced Energy-Based Models module of the Deep Learning Course at NYU's Center for Data Science. Prerequisites for this course include: Energy-Based Models I, Energy-Based Models II, Energy-Based Models III, Energy-Based Models IV, and an Introduction to Data Science or a Graduate Level Machine Learning course.

Difficulty level: Advanced

Duration: 2:00:28

Speaker: Yann LeCun

This tutorial covers autoencoders, denoising autoencoders, and variational autoencoders (VAEs) in PyTorch, as well as generative adversarial networks (GANs) and their code. It is part of the Advanced Energy-Based Models module of the Deep Learning Course at NYU's Center for Data Science. Prerequisites for this course include: Energy-Based Models I, Energy-Based Models II, Energy-Based Models III, Energy-Based Models IV, Energy-Based Models V, and an Introduction to Data Science or a Graduate Level Machine Learning course.

Difficulty level: Advanced

Duration: 1:07:50

Speaker: Alfredo Canziani

This lecture covers advanced concepts of energy-based models. The lecture is part of the Associative Memories module of the Deep Learning Course at NYU's Center for Data Science. Prerequisites for this course include: Energy-Based Models I, Energy-Based Models II, Energy-Based Models III, Energy-Based Models IV, Energy-Based Models V, and an Introduction to Data Science or a Graduate Level Machine Learning course.

Difficulty level: Advanced

Duration: 2:00:28

Speaker: Yann LeCun

This tutorial covers advanced concepts of energy-based models. It is part of the Associative Memories module of the Deep Learning Course at NYU's Center for Data Science.

Difficulty level: Advanced

Duration: 1:12:00

Speaker: Alfredo Canziani

This lecture provides an introduction to the problem of speech recognition using neural models, emphasizing the CTC loss for training and inference when input and output sequences are of different lengths. It also covers the concept of beam search for use during inference, and how that procedure may be modeled at training time using a Graph Transformer Network. It is a part of the Deep Learning Course at NYU's Center for Data Science. Prerequisites for this module include: Modules 1 - 5 of this course and an Introduction to Data Science or a Graduate Level Machine Learning course.

Difficulty level: Advanced

Duration: 1:55:03

Speaker: Awni Hannun
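The CTC loss sums the probability of every frame-level alignment that collapses (merge repeats, then drop blanks) to the target sequence, which is what lets input and output lengths differ. Below is a minimal pure-Python sketch of the forward (alpha) recursion, assuming per-frame probabilities are already given and that symbol 0 is the blank; all names are illustrative and not taken from the lecture. It works in probability space for readability, whereas practical implementations work in log space and obtain gradients through a backward pass or automatic differentiation (for example PyTorch's `torch.nn.CTCLoss`). A brute-force enumerator is included as a reference check.

```python
import itertools
import math


def ctc_forward(probs, target, blank=0):
    """Total probability of `target`, summed over all CTC alignments.

    probs:  T x V nested list; probs[t][v] = p(symbol v at frame t).
    target: label sequence without blanks, e.g. [1, 2].
    """
    ext = [blank]  # target interleaved with blanks: [b, y1, b, y2, ..., b]
    for c in target:
        ext += [c, blank]
    S, T = len(ext), len(probs)
    alpha = [0.0] * S
    alpha[0] = probs[0][ext[0]]  # start with a blank ...
    if S > 1:
        alpha[1] = probs[0][ext[1]]  # ... or with the first label
    for t in range(1, T):
        new = [0.0] * S
        for s in range(S):
            a = alpha[s]  # stay on the same symbol
            if s >= 1:
                a += alpha[s - 1]  # advance by one position
            # Skipping a blank is only allowed between two different labels.
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                a += alpha[s - 2]
            new[s] = a * probs[t][ext[s]]
        alpha = new
    # Valid paths end on the last label or on the trailing blank.
    return alpha[S - 1] + (alpha[S - 2] if S > 1 else 0.0)


def ctc_loss(probs, target, blank=0):
    """Negative log-likelihood used as the CTC training loss."""
    return -math.log(ctc_forward(probs, target, blank))


def collapse(path, blank=0):
    """CTC collapsing rule: merge repeated symbols, then drop blanks."""
    out, prev = [], None
    for c in path:
        if c != prev and c != blank:
            out.append(c)
        prev = c
    return out


def ctc_brute_force(probs, target, blank=0):
    """Reference check: enumerate all V**T paths (feasible only for tiny inputs)."""
    T, V = len(probs), len(probs[0])
    total = 0.0
    for path in itertools.product(range(V), repeat=T):
        if collapse(path, blank) == list(target):
            total += math.prod(probs[t][v] for t, v in enumerate(path))
    return total
```

The forward recursion costs O(T · S) instead of the O(V^T) of enumeration, which is why it is used for both training and scoring.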

This lecture covers the architecture of traditional convolutional neural networks and the convolution operation they rely on; the characteristics of graphs and graph convolution; and spectral graph convolutional neural networks, including how to perform spectral convolution. It surveys the full spectrum of Graph Convolutional Networks (GCNs), starting with spectral convolution as implemented in Spectral Networks, and then discusses how the other definition of convolution, template matching, can be applied to graphs, leading to spatial networks. This lecture is part of the Deep Learning Course at NYU's Center for Data Science. Prerequisites for this module include: Modules 1 - 5 of this course and an Introduction to Data Science or a Graduate Level Machine Learning course.

Difficulty level: Advanced

Duration: 2:00:22

Speaker: Xavier Bresson
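Spectral graph convolution filters a node signal in the eigenbasis of the graph Laplacian L = U diag(λ) Uᵀ. A common way to avoid computing U is to make the filter a polynomial in the eigenvalues, since Σₖ θₖ Lᵏ x = U diag(Σₖ θₖ λᵏ) Uᵀ x. The sketch below illustrates that identity in pure Python on small dense matrices; it is an assumption-laden simplification (plain powers of the unnormalized Laplacian, fixed rather than learned coefficients), whereas ChebNet-style layers use Chebyshev polynomials of a rescaled Laplacian. Function names are hypothetical.

```python
def laplacian(adj):
    """Combinatorial graph Laplacian L = D - A for a dense, symmetric adjacency matrix."""
    n = len(adj)
    return [[(sum(adj[i]) if i == j else 0) - adj[i][j] for j in range(n)]
            for i in range(n)]


def matvec(m, x):
    """Dense matrix-vector product."""
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in m]


def polynomial_spectral_filter(adj, x, theta):
    """Apply y = sum_k theta[k] * L^k x to the node signal x.

    Because L = U diag(lam) U^T, this equals U diag(g(lam)) U^T x with
    g(lam) = sum_k theta[k] * lam**k: a spectral filter that needs no
    eigendecomposition and only mixes nodes up to len(theta) - 1 hops apart.
    """
    L = laplacian(adj)
    y = [0.0] * len(x)
    power = [float(v) for v in x]  # L^0 x
    for k, coeff in enumerate(theta):
        if k > 0:
            power = matvec(L, power)  # L^k x from L^(k-1) x
        y = [yi + coeff * pi for yi, pi in zip(y, power)]
    return y
```

For a single edge, L has eigenvalues 0 and 2, so with θ = [1, 3] the filter scales the two eigencomponents of x = [1, 0] by g(0) = 1 and g(2) = 7; recombining them gives [4, -3], the same result as computing x + 3Lx directly.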

Topics

- Philosophy of Science (5)

- Artificial Intelligence (4)

- BIDS (3)

- Neurodata Without Borders (2)

- NIDM (1)

- Animal models (2)

- Assembly 2021 (26)

- Brain-hardware interfaces (1)

- Clinical neuroscience (10)

- International Brain Initiative (2)

- Repositories and science gateways (5)

- Resources (6)

- General neuroscience (5)

- General neuroinformatics (12)

- Computational neuroscience (49)

- Statistics (1)

- Computer Science (3)

- Genomics (1)

- Data science (14)

- Open science (10)

- Project management (3)

- Education (1)

- Neuroethics (6)