This lightning talk describes an automated pipeline for positron emission tomography (PET) data.

Difficulty level: Intermediate

Duration: 7:27

Speaker: Soodeh Moallemian

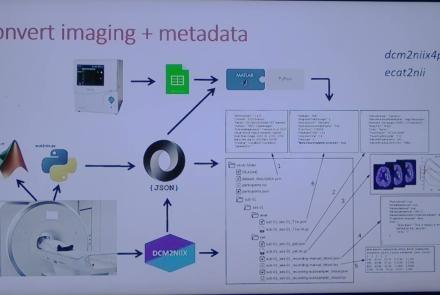

This session introduces the PET-to-BIDS (PET2BIDS) library, a toolkit designed to simplify the conversion and preparation of PET imaging datasets into BIDS-compliant formats. It supports multiple data types and formats (e.g., DICOM, ECAT7+, NIfTI, JSON), integrates seamlessly with Excel-based metadata, and provides automated routines for metadata updates, blood data conversion, and JSON synchronization. PET2BIDS improves human readability by mapping complex reconstruction names into standardized, descriptive labels and offers extensive documentation, examples, and video tutorials to make adoption easier for researchers.

Difficulty level: Intermediate

Duration: 9:23

Speaker: Cyril Pernet

This session introduces the PET-to-BIDS (PET2BIDS) library, a toolkit designed to simplify the conversion and preparation of PET imaging datasets into BIDS-compliant formats. It supports multiple data types and formats (e.g., DICOM, ECAT7+, NIfTI, JSON), integrates seamlessly with Excel-based metadata, and provides automated routines for metadata updates, blood data conversion, and JSON synchronization. PET2BIDS improves human readability by mapping complex reconstruction names into standardized, descriptive labels and offers extensive documentation, examples, and video tutorials to make adoption easier for researchers.

Difficulty level: Intermediate

Duration: 41:04

Speaker: Martin Nørgaard

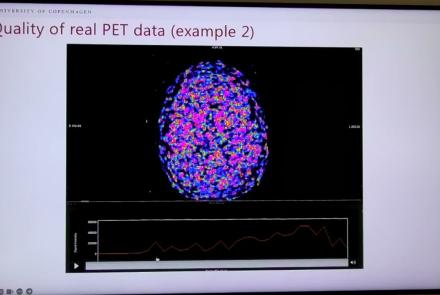

This session dives into practical PET tooling on BIDS data—showing how to run motion correction, register PET↔MRI, extract time–activity curves, and generate standardized PET-BIDS derivatives with clear QC reports. It introduces modular BIDS Apps (head-motion correction, TAC extraction), a full pipeline (PETPrep), and a PET/MRI defacer, with guidance on parameters, outputs, provenance, and why Dockerized containers are the reliable way to run them at scale.

Difficulty level: Intermediate

Duration: 1:05:38

Speaker: Martin Nørgaard

This session introduces two PET quantification tools, bloodstream for processing arterial blood data and kinfitr for kinetic modeling and quantification, both built to work with BIDS/BIDS-derivatives and containers. Bloodstream fuses autosampler and manual measurements (whole blood, plasma, parent fraction) using interpolation or fitted models (incl. hierarchical GAMs) to produce a clean arterial input function (AIF) and whole-blood curves, along with rich QC reports. TAC data (e.g., from PETPrep) and blood data (e.g., from bloodstream) can be ingested by kinfitr to run reproducible, GUI-driven analyses: define combined ROIs, calculate weighting factors, estimate blood–tissue delay, choose and chain models (e.g., 2TCM → 1TCM with parameter inheritance), and export parameters/diagnostics. Both are available as Docker apps; workflows emphasize configuration files, reports, and standard outputs to support transparency and reuse.

Difficulty level: Intermediate

Duration: 1:20:56

Speaker: Granville Matheson

This lecture covers positron emission tomography (PET) imaging and the Brain Imaging Data Structure (BIDS), and how they work together within the PET-BIDS standard to make neuroscience more open and FAIR.

Difficulty level: Beginner

Duration: 12:06

Speaker: Melanie Ganz

This module covers many of the types of non-invasive neurotech and neuroimaging devices including electroencephalography (EEG), electromyography (EMG), electroneurography (ENG), magnetoencephalography (MEG), and more.

Difficulty level: Beginner

Duration: 13:36

Speaker: Harrison Canning

This lesson breaks down the principles of Bayesian inference and how they relate to cognitive processes and functions like learning and perception. It then explains how cognitive models can be built using Bayesian statistics to investigate how our brains interface with their environment.

This lesson corresponds to slides 1-64 in the PDF below.

Difficulty level: Intermediate

Duration: 1:28:14

Speaker: Andreea Diaconescu

This is a tutorial on designing a Bayesian inference model to map belief trajectories, with emphasis on gaining familiarity with Hierarchical Gaussian Filters (HGFs).

This lesson corresponds to slides 65-90 of the PDF below.

Difficulty level: Intermediate

Duration: 1:15:04

Speaker: Daniel Hauke

This tutorial walks participants through the application of dynamic causal modelling (DCM) to fMRI data using MATLAB. Participants are also shown various forms of DCM, how to generate and specify different models, and how to fit them to simulated neural and BOLD data.

This lesson corresponds to slides 158-187 of the PDF below.

Difficulty level: Advanced

Duration: 1:22:10

Speaker: Peter Bedford, Povilas Karvelis

This tutorial introduces pipelines and methods to compute brain connectomes from fMRI data. With corresponding code and repositories, participants can follow along and learn how to programmatically preprocess, curate, and analyze functional and structural brain data to produce connectivity matrices.

Difficulty level: Intermediate

Duration: 1:39:04

Speaker: Erin Dickie and John Griffiths

In this lightning talk, you will learn about BrainGlobe, an initiative which exists to facilitate the development of interoperable Python-based tools for computational neuroanatomy.

Difficulty level: Beginner

Duration: 3:33

Speaker: Alessandro Felder

In this short talk you will learn about The Neural System Laboratory, which aims to develop and implement new technologies for analysis of brain architecture, connectivity, and brain-wide gene and molecular level organization.

Difficulty level: Beginner

Duration: 8:38

Speaker: Trygve Leergaard

In this lecture, you will learn about current methods, approaches, and challenges to studying human neuroanatomy, particularly through the lens of neuroimaging data such as fMRI and diffusion tensor imaging (DTI).

Difficulty level: Intermediate

Duration: 1:35:14

Speaker: Matt Glasser

This video demonstrates each required step for preprocessing T1w anatomical data in brainlife.io.

Difficulty level: Beginner

Duration: 3:28

Speaker: :

This lesson delves into the human nervous system and the immense cellular, connectomic, and functional sophistication therein.

Difficulty level: Intermediate

Duration: 8:41

Speaker: Marcus Ghosh

This lecture provides an introduction to the principles of anatomical organization of neural systems in the human brain and spinal cord that mediate sensation, integrate signals, and motivate behavior.

Difficulty level: Beginner

Duration: 59:57

Speaker: Lars Klimaschewski

This lecture focuses on the comprehension of nociception and pain sensation, highlighting how the somatosensory system and different molecular partners are involved in nociception.

Difficulty level: Beginner

Duration: 28:09

Speaker: Serena Quarta

From the retina to the superior colliculus, the lateral geniculate nucleus into primary visual cortex and beyond, this lecture gives a tour of the mammalian visual system, highlighting the Nobel Prize-winning discoveries of Hubel & Wiesel.

Difficulty level: Beginner

Duration: 56:31

Speaker: Clay Reid

From Universal Turing Machines to McCulloch-Pitts and Hopfield associative memory networks, this lecture explains what is meant by computation.

Difficulty level: Beginner

Duration: 55:27

Speaker: Christof Koch
