This lesson introduces the EEGLAB toolbox and the motivations for using it.

Difficulty level: Beginner

Duration: 15:32

Speaker: Arnaud Delorme

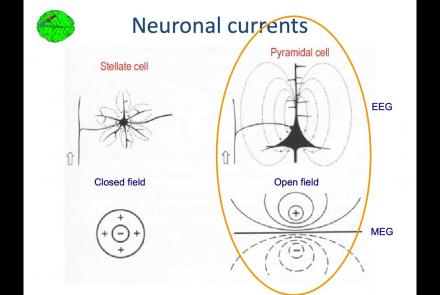

In this lesson, you will learn about the biological activity that generates the signal measured by EEG.

Difficulty level: Beginner

Duration: 6:53

Speaker: Arnaud Delorme

This lesson covers the characteristics of EEG signals analyzed in source space, as opposed to sensor space.

Difficulty level: Beginner

Duration: 10:56

Speaker: Arnaud Delorme

This lesson describes the development of EEGLAB and the extent to which it is used by the research community.

Difficulty level: Beginner

Duration: 6:06

Speaker: Arnaud Delorme

This lesson explains how to build a processing pipeline in EEGLAB for a single participant.

Difficulty level: Beginner

Duration: 9:20

Speaker:

Whereas the previous lesson of this course outlined how to build a processing pipeline for a single participant, this lesson discusses analysis pipelines for multiple participants simultaneously.

Difficulty level: Beginner

Duration: 10:55

Speaker: Arnaud Delorme

In addition to outlining the motivations behind preprocessing EEG data in general, this lesson covers the first step in preprocessing data with EEGLAB: importing raw data.

Difficulty level: Beginner

Duration: 8:30

Speaker: Arnaud Delorme

Continuing along the EEGLAB preprocessing pipeline, this tutorial walks users through how to import data events as well as EEG channel locations.

Difficulty level: Beginner

Duration: 11:53

Speaker: Arnaud Delorme

This tutorial demonstrates how to re-reference and resample raw data in EEGLAB, why such steps are important or useful in the preprocessing pipeline, and how choices made at this step may affect subsequent analyses.

Difficulty level: Beginner

Duration: 11:48

Speaker: Arnaud Delorme
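As a rough illustration of the two operations named in this tutorial, the sketch below applies an average reference and a crude integer-factor downsampling to a toy recording. It is written in plain Python rather than EEGLAB's MATLAB, and the function names `average_reference` and `downsample_by_averaging` are hypothetical; it is not the procedure shown in the lesson.

```python
from statistics import fmean


def average_reference(data):
    """Subtract the across-channel mean from every channel at each sample.

    `data` is a list of channels, each a list of samples of equal length.
    """
    n_samples = len(data[0])
    means = [fmean(ch[t] for ch in data) for t in range(n_samples)]
    return [[ch[t] - means[t] for t in range(n_samples)] for ch in data]


def downsample_by_averaging(channel, factor):
    """Reduce the sampling rate by an integer factor by averaging blocks.

    Block averaging is only a crude low-pass filter; dedicated resampling
    routines apply a proper anti-aliasing filter instead.
    """
    usable = len(channel) - len(channel) % factor  # drop a trailing partial block
    return [fmean(channel[i:i + factor]) for i in range(0, usable, factor)]


# Toy recording: 3 channels, 4 samples (arbitrary units).
raw = [
    [1.0, 2.0, 3.0, 4.0],
    [2.0, 2.0, 2.0, 2.0],
    [3.0, 2.0, 1.0, 0.0],
]
rereferenced = average_reference(raw)
halved = downsample_by_averaging(raw[0], 2)
```

After average referencing, the channels sum to zero at every sample, which is one reason the choice of reference can affect later analysis steps.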

In this tutorial, users learn about the filtering options in EEGLAB, how to inspect channel properties for noisy signals, and how to filter out specific components of EEG data (e.g., electrical line noise).

Difficulty level: Beginner

Duration: 10:46

Speaker: Arnaud Delorme

This tutorial shows users how to visually inspect partially preprocessed neuroimaging data in EEGLAB, specifically how to use the data browser to examine channels, epochs, or events for removable artifacts, whether biological (e.g., eye blinks, muscle movements, heartbeat) or not (e.g., a corrupt channel, line noise).

Difficulty level: Beginner

Duration: 5:08

Speaker: Arnaud Delorme

This tutorial shows how to use EEGLAB to further preprocess EEG datasets by identifying and discarding bad channels which, if left unaddressed, can corrupt and confound subsequent analysis steps.

Difficulty level: Beginner

Duration: 13:01

Speaker: Arnaud Delorme
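To make the idea of a "bad channel" concrete, here is a minimal sketch of one simple heuristic: flag channels whose amplitude spread is an outlier relative to the others. This is an assumption chosen for illustration in plain Python, not necessarily the criterion used in the lesson or in EEGLAB; the function name `flag_bad_channels` and the default threshold are hypothetical.

```python
from statistics import median, pstdev


def flag_bad_channels(data, threshold=3.0):
    """Return indices of channels whose amplitude spread is an outlier.

    Uses a robust z-score (median and median absolute deviation) of each
    channel's standard deviation. The threshold is a hypothetical default.
    """
    spreads = [pstdev(ch) for ch in data]
    centre = median(spreads)
    mad = median(abs(s - centre) for s in spreads)
    if mad == 0:
        return []  # at least half the spreads equal the median; no usable scale
    # 1.4826 scales the MAD to match the standard deviation of a normal distribution.
    return [
        i for i, s in enumerate(spreads)
        if abs(s - centre) / (1.4826 * mad) > threshold
    ]


# Toy recording: four channels with similar spread and one very noisy channel.
recording = [
    [0.0, 1.0, 0.0, -1.0] * 2,
    [0.0, 1.1, 0.0, -1.1] * 2,
    [0.0, 0.9, 0.0, -0.9] * 2,
    [0.0, 1.2, 0.0, -1.2] * 2,
    [0.0, 50.0, 0.0, -50.0] * 2,
]
bad = flag_bad_channels(recording)
```

A median-based score is used here so that the noisy channel itself does not inflate the scale it is compared against.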

Users following this tutorial will learn how to identify and discard bad EEG data segments using the MATLAB toolbox EEGLAB.

Difficulty level: Beginner

Duration: 11:25

Speaker: Arnaud Delorme

This lecture gives an overview of how to prepare and preprocess neuroimaging (EEG/MEG) data for use in TVB.

Difficulty level: Intermediate

Duration: 1:40:52

Speaker: Paul Triebkorn

This module covers many of the types of non-invasive neurotech and neuroimaging devices including electroencephalography (EEG), electromyography (EMG), electroneurography (ENG), magnetoencephalography (MEG), and more.

Difficulty level: Beginner

Duration: 13:36

Speaker: Harrison Canning

Hierarchical Event Descriptors (HED) fill a major gap in the neuroinformatics standards toolkit, namely the specification of the nature(s) of events and time-limited conditions recorded as having occurred during time series recordings (EEG, MEG, iEEG, fMRI, etc.). Here, the HED Working Group presents an online INCF workshop on the need for, structure of, tools for, and use of HED annotation to prepare neuroimaging time series data for storing, sharing, and advanced analysis.

Difficulty level: Beginner

Duration: 3:37:42

Speaker:

This lesson introduces the Brain Imaging Data Structure (BIDS), the community standard for organizing, curating, and sharing neuroimaging and associated data. The session focuses on understanding the BIDS framework and learning its data structure and validation processes.

Difficulty level: Intermediate

Duration: 38:52

Speaker: Cyril Pernet
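As a small illustration of the kind of structure BIDS prescribes, the sketch below builds the relative path of an EEG recording from its subject, optional session, and task labels. The helper name `bids_eeg_path` and the default `.edf` extension are assumptions made for this example; it covers only a few entities and is no substitute for the BIDS validator.

```python
from pathlib import PurePosixPath


def bids_eeg_path(subject, task, session=None, extension=".edf"):
    """Build a BIDS-style relative path for an EEG recording.

    Hypothetical helper: handles only the subject, session, and task entities.
    """
    for label in (subject, task, session):
        # Reject labels containing separators such as "_" or "-".
        if label is not None and not label.isalnum():
            raise ValueError(f"label must be alphanumeric: {label!r}")

    entities = [f"sub-{subject}"]
    if session is not None:
        entities.append(f"ses-{session}")
    folder = PurePosixPath(*entities, "eeg")  # sub-XX[/ses-YY]/eeg
    entities.append(f"task-{task}")
    return folder / ("_".join(entities) + "_eeg" + extension)


single_session = bids_eeg_path("01", "rest")
multi_session = bids_eeg_path("01", "rest", session="02")
```

Because the folder names and the filename are built from the same key-value entities, a file's location and name stay consistent with each other.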

This session moves from BIDS basics into analysis workflows, focusing on how to turn raw, BIDS-organized data into derivatives using BIDS Apps and containers for reproducible processing. It compares end-to-end pipelines across fMRI and PET (and notes EEG/MEG), explains typical preprocessing choices, and shows how standardized inputs plus containerized tools (Docker/Apptainer) yield consistent, auditable outputs.

Difficulty level: Intermediate

Duration: 56:03

Speaker: Martin Nørgaard

The session explains GDPR rules around data sharing for research in Europe, the distinction between law and ethics, and introduces practical solutions for securely sharing sensitive datasets. Researchers have more flexibility than commonly assumed: scientific research is considered a public interest task, so explicit consent for data sharing isn’t legally required, though transparency and informing participants remain ethically important. The talk also introduces publicneuro.eu, a controlled-access platform that enables sharing neuroimaging datasets with open metadata, DOIs, and customizable access restrictions while ensuring GDPR compliance.

Difficulty level: Intermediate

Duration: 31:12

Speaker: Cyril Pernet

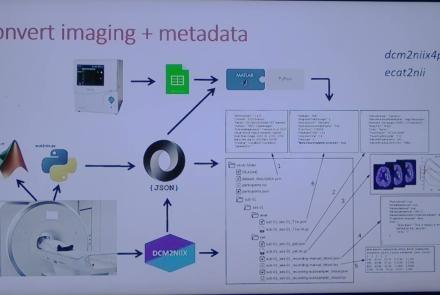

This session introduces the PET-to-BIDS (PET2BIDS) library, a toolkit designed to simplify the conversion and preparation of PET imaging datasets into BIDS-compliant formats. It supports multiple data types and formats (e.g., DICOM, ECAT7+, NIfTI, JSON), integrates seamlessly with Excel-based metadata, and provides automated routines for metadata updates, blood data conversion, and JSON synchronization. PET2BIDS improves human readability by mapping complex reconstruction names into standardized, descriptive labels and offers extensive documentation, examples, and video tutorials to make adoption easier for researchers.

Difficulty level: Intermediate

Duration: 9:23

Speaker: Cyril Pernet
