Manipulate the default connectome provided with TVB to see how structural lesions affect brain dynamics. In this hands-on session you will insert lesions into the connectome within the TVB graphical user interface (GUI). Afterwards, the modified connectome will be used for simulations, and the resulting activity will be analysed using functional connectivity.
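The lesson itself works in the TVB GUI. As a rough illustration of the two ideas involved (removing a region's connections, then computing functional connectivity), here is a minimal NumPy sketch. It does not use the TVB API; the function names `lesion_regions` and `functional_connectivity`, the toy 4-region matrix, and the random time series are all assumptions made for illustration.

```python
import numpy as np


def lesion_regions(weights, regions):
    """Return a copy of a connectivity matrix with every connection
    to and from the given region indices set to zero."""
    lesioned = np.array(weights, dtype=float, copy=True)
    lesioned[regions, :] = 0.0  # outgoing/incoming rows
    lesioned[:, regions] = 0.0  # outgoing/incoming columns
    return lesioned


def functional_connectivity(timeseries):
    """Pearson correlation between regional time series.

    `timeseries` has shape (n_timepoints, n_regions)."""
    return np.corrcoef(timeseries, rowvar=False)


# Toy data (hypothetical): a random 4-region connectome and random activity.
rng = np.random.default_rng(0)
weights = rng.random((4, 4))
np.fill_diagonal(weights, 0.0)

lesioned = lesion_regions(weights, [2])       # "lesion" region 2
activity = rng.standard_normal((200, 4))      # stand-in for simulated activity
fc = functional_connectivity(activity)        # 4 x 4 correlation matrix
```

In a real workflow the time series would come from a simulation run on the lesioned connectome rather than from random numbers.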

Difficulty level: Beginner

Duration: 31:22

Speaker: Paul Triebkorn

This presentation discusses the impact of data sharing in stroke.

Difficulty level: Intermediate

Duration: 16:33

Speaker: Valeria Caso

This talk presents an overview of the potential for data federation in stroke research.

Difficulty level: Intermediate

Duration: 21:37

Speaker: Maurizio A. Leone

Course:

This talk focuses on the EAN Scientific Panel of Stroke, in particular on the aims and roles of the panel.

Difficulty level: Intermediate

Duration: 18:19

Speaker: Anna Bersano

Course:

This lesson is a general overview of overarching concepts in neuroinformatics research, with a particular focus on clinical approaches to defining, measuring, studying, diagnosing, and treating various brain disorders. Also described are the complex, multi-level nature of brain disorders and the data associated with them, from genes and individual cells up to cortical microcircuits and whole-brain network dynamics. Given the heterogeneity of brain disorders and their underlying mechanisms, this lesson lays out a case for multiscale neuroscience data integration.

Difficulty level: Intermediate

Duration: 1:09:33

Speaker: Sean Hill

Course:

In this tutorial on simulating whole-brain activity using Python, participants can follow along using corresponding code and repositories, learning the basics of neural oscillatory dynamics, evoked responses and EEG signals, ultimately leading to the design of a network model of whole-brain anatomical connectivity.

Difficulty level: Intermediate

Duration: 1:16:10

Speaker: John Griffiths

This lesson breaks down the principles of Bayesian inference and how it relates to cognitive processes and functions like learning and perception. It is then explained how cognitive models can be built using Bayesian statistics in order to investigate how our brains interface with their environment.

This lesson corresponds to slides 1-64 in the PDF below.

Difficulty level: Intermediate

Duration: 1:28:14

Speaker: Andreea Diaconescu

This talk gives a brief overview of current efforts to collect and share the Brain Reference Architecture (BRA) data involved in the construction of a whole-brain architecture that assigns functions to major brain organs.

Difficulty level: Beginner

Duration: 4:02

Speaker: Yoshimasa Tawatsuji

This brief talk discusses the idea that music, as a naturalistic stimulus, offers a window into higher cognition and various levels of neural architecture.

Difficulty level: Beginner

Duration: 4:04

Speaker: Sarah Faber

In this short talk you will learn about The Neural System Laboratory, which aims to develop and implement new technologies for analysis of brain architecture, connectivity, and brain-wide gene and molecular level organization.

Difficulty level: Beginner

Duration: 8:38

Speaker: Trygve Leergaard

Whereas the previous two lessons described the biophysical and signalling properties of individual neurons, this lesson describes properties of those units when part of larger networks.

Difficulty level: Intermediate

Duration: 6:00

Speaker: Marcus Ghosh

This lesson goes over some examples of how machine learners and computational neuroscientists go about designing and building neural network models inspired by biological brain systems.

Difficulty level: Intermediate

Duration: 12:52

Speaker: Dan Goodman

Course:

This lecture and tutorial focuses on measuring human functional brain networks, as well as how to account for inherent variability within those networks.

Difficulty level: Intermediate

Duration: 50:44

Speaker: Caterina Gratton

Course:

This lecture presents an overview of functional brain parcellations, as well as a set of tutorials on bootstrap aggregation of stable clusters (BASC) for fMRI brain parcellation.

Difficulty level: Advanced

Duration: 50:28

Speaker: Pierre Bellec

Course:

Neuronify is an educational tool meant to build intuition for how neurons and neural networks behave. You can use it to combine neurons with different connections, just like the ones we have in our brain, and explore how changes in single cells lead to behavioral changes in important networks. Neuronify is based on an integrate-and-fire model of neurons, one of the simplest neuron models that exist. It focuses on the spike timing of a neuron and ignores the details of the action potential dynamics. These neurons are modeled as simple RC circuits. When the membrane potential rises above a certain threshold, a spike is generated and the voltage is reset to its resting potential. This spike then signals other neurons through its synapses.

Neuronify aims to provide a low entry point to simulation-based neuroscience.
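The integrate-and-fire mechanism described above (RC-circuit dynamics, a threshold, and a reset to the resting potential) can be sketched in a few lines of Python. This is a minimal illustration and not Neuronify's own code; the function name `simulate_lif` and all parameter values (time constant, resistance, resting and threshold potentials, input currents) are assumptions chosen only to make the example run.

```python
def simulate_lif(input_current, dt=1e-4, tau=0.02, resistance=1e7,
                 v_rest=-0.07, v_threshold=-0.05):
    """Simulate one leaky integrate-and-fire neuron with forward Euler steps.

    input_current: sequence of input currents in amperes, one per time step.
    Returns the membrane potential trace (volts) and the spike times (seconds).
    All default values are illustrative assumptions.
    """
    v = v_rest
    voltages, spike_times = [], []
    for step, current in enumerate(input_current):
        # RC-circuit dynamics: tau * dV/dt = -(V - V_rest) + R * I
        v += dt / tau * (-(v - v_rest) + resistance * current)
        if v > v_threshold:
            # Threshold crossed: record a spike and reset to rest.
            spike_times.append(step * dt)
            v = v_rest
        voltages.append(v)
    return voltages, spike_times


# One second of constant input at two strengths (hypothetical values).
n_steps = 10_000
_, strong_spikes = simulate_lif([3e-9] * n_steps)   # drives V past threshold
trace, weak_spikes = simulate_lif([1e-9] * n_steps)  # stays below threshold
```

With the stronger input the steady-state voltage would lie above threshold, so the neuron fires repeatedly; with the weaker input it settles below threshold and stays silent.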

Difficulty level: Beginner

Duration: 01:25

Speaker: Neuronify

This lesson provides an overview of the current status in the field of neuroscientific ontologies, presenting examples of data organization and standards, particularly from neuroimaging and electrophysiology.

Difficulty level: Intermediate

Duration: 33:41

Speaker: Yaroslav O. Halchenko

The lesson introduces the Brain Imaging Data Structure (BIDS), the community standard for organizing, curating, and sharing neuroimaging and associated data. The session focuses on understanding the BIDS framework, learning its data structure and validation processes.
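To give a feel for the BIDS data structure, the sketch below assembles a file path from a few common BIDS entities (`sub`, `ses`, `task`, `run`) followed by a suffix and extension. It is a simplified illustration, not a validator: the helper name `bids_path` is hypothetical, and only a small subset of the entities defined by the specification is handled.

```python
from pathlib import PurePosixPath


def bids_path(subject, datatype, suffix, session=None, task=None,
              run=None, extension=".nii.gz"):
    """Build a relative BIDS-style path from a few common entities.

    Hypothetical helper covering only sub, ses, task and run.
    """
    entities = [f"sub-{subject}"]
    folders = [f"sub-{subject}"]
    if session is not None:
        entities.append(f"ses-{session}")
        folders.append(f"ses-{session}")
    if task is not None:
        entities.append(f"task-{task}")
    if run is not None:
        entities.append(f"run-{run}")
    # Entities are joined with underscores; the suffix comes last.
    filename = "_".join(entities + [suffix]) + extension
    return PurePosixPath(*folders, datatype, filename)


anat = bids_path("01", "anat", "T1w")
func = bids_path("01", "func", "bold", session="02", task="rest", run=1)
print(anat)  # sub-01/anat/sub-01_T1w.nii.gz
print(func)  # sub-01/ses-02/func/sub-01_ses-02_task-rest_run-1_bold.nii.gz
```

For real datasets, the official BIDS validator remains the way to check compliance.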

Difficulty level: Intermediate

Duration: 38:52

Speaker: Cyril Pernet

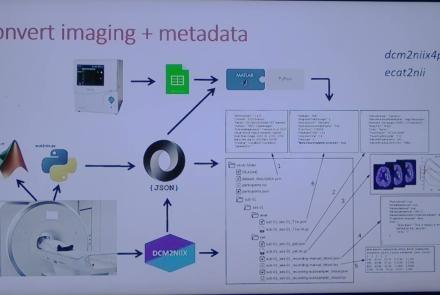

This session moves from BIDS basics into analysis workflows, focusing on how to turn raw, BIDS-organized data into derivatives using BIDS Apps and containers for reproducible processing. It compares end-to-end pipelines across fMRI and PET (and notes EEG/MEG), explains typical preprocessing choices, and shows how standardized inputs plus containerized tools (Docker/Apptainer) yield consistent, auditable outputs.
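BIDS Apps share a common command-line convention: an input BIDS directory, an output directory, and an analysis level, optionally followed by participant labels. The sketch below only assembles such a Docker command as a list of arguments and does not run anything. The helper name `bids_app_command`, the image name, the host paths, and the container mount points are placeholders chosen for illustration.

```python
def bids_app_command(image, bids_dir, output_dir, level="participant",
                     participants=()):
    """Assemble a `docker run` argument list following the usual
    BIDS Apps convention: <bids_dir> <output_dir> <analysis_level>.

    Hypothetical helper; the container mount points are assumptions.
    """
    command = [
        "docker", "run", "--rm",
        "-v", f"{bids_dir}:/bids_dataset:ro",  # raw data mounted read-only
        "-v", f"{output_dir}:/outputs",        # derivatives written here
        image, "/bids_dataset", "/outputs", level,
    ]
    if participants:
        command += ["--participant_label", *participants]
    return command


# Placeholder image name and paths, for illustration only.
cmd = bids_app_command("bids/example", "/data/ds001", "/data/derivatives",
                       participants=["01", "02"])
print(" ".join(cmd))
```

The resulting list could be passed to `subprocess.run(cmd, check=True)` on a machine with Docker installed; individual apps may require additional options.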

Difficulty level: Intermediate

Duration: 56:03

Speaker: Martin Nørgaard

The session explains GDPR rules around data sharing for research in Europe, the distinction between law and ethics, and introduces practical solutions for securely sharing sensitive datasets. Researchers have more flexibility than commonly assumed: scientific research is considered a public interest task, so explicit consent for data sharing isn’t legally required, though transparency and informing participants remain ethically important. The talk also introduces publicneuro.eu, a controlled-access platform that enables sharing neuroimaging datasets with open metadata, DOIs, and customizable access restrictions while ensuring GDPR compliance.

Difficulty level: Intermediate

Duration: 31:12

Speaker: Cyril Pernet

This session introduces the PET-to-BIDS (PET2BIDS) library, a toolkit designed to simplify the conversion and preparation of PET imaging datasets into BIDS-compliant formats. It supports multiple data types and formats (e.g., DICOM, ECAT7+, NIfTI, JSON), integrates with Excel-based metadata, and provides automated routines for metadata updates, blood data conversion, and JSON synchronization. PET2BIDS improves human readability by mapping complex reconstruction names into standardized, descriptive labels and offers extensive documentation, examples, and video tutorials to make adoption easier for researchers.

Difficulty level: Intermediate

Duration: 9:23

Speaker: Cyril Pernet
