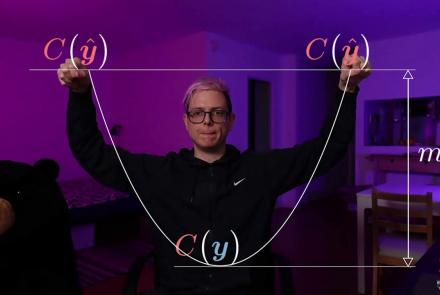

This tutorial covers the concepts of autoencoders, denoising autoencoders, and variational autoencoders (VAEs) with PyTorch, as well as generative adversarial networks, with accompanying code. It is part of the Advanced Energy-Based Models module of the Deep Learning Course at NYU's Center for Data Science. Prerequisites for this course include: Energy-Based Models I, Energy-Based Models II, Energy-Based Models III, Energy-Based Models IV, Energy-Based Models V, and an Introduction to Data Science or a Graduate Level Machine Learning course.

Difficulty level: Advanced

Duration: 1:07:50

Speaker: Alfredo Canziani

This lecture covers advanced concepts of energy-based models. The lecture is part of the Associative Memories module of the Deep Learning Course at NYU's Center for Data Science. Prerequisites for this course include: Energy-Based Models I, Energy-Based Models II, Energy-Based Models III, Energy-Based Models IV, Energy-Based Models V, and an Introduction to Data Science or a Graduate Level Machine Learning course.

Difficulty level: Advanced

Duration: 2:00:28

Speaker: Yann LeCun

This tutorial covers advanced concepts of energy-based models. The tutorial is part of the Associative Memories module of the Deep Learning Course at NYU's Center for Data Science.

Difficulty level: Advanced

Duration: 1:12:00

Speaker: Alfredo Canziani

This lecture provides an introduction to the problem of speech recognition using neural models, emphasizing the CTC loss for training and inference when input and output sequences are of different lengths. It also covers the concept of beam search for use during inference, and how that procedure may be modeled at training time using a Graph Transformer Network. It is a part of the Deep Learning Course at NYU's Center for Data Science. Prerequisites for this module include: Modules 1 - 5 of this course and an Introduction to Data Science or a Graduate Level Machine Learning course.

Difficulty level: Advanced

Duration: 1:55:03

Speaker: Awni Hannun

This lecture covers the architecture and convolution operation of traditional convolutional neural networks, the characteristics of graphs and graph convolution, and spectral graph convolutional neural networks, including how to perform spectral convolution. It surveys the complete spectrum of Graph Convolutional Networks (GCNs), starting with the implementation of spectral convolution through Spectral Networks. It then provides insights on the applicability of the other definition of convolution, template matching, to graphs, which leads to Spatial Networks. This lecture is part of the Deep Learning Course at NYU's Center for Data Science. Prerequisites for this module include: Modules 1 - 5 of this course and an Introduction to Data Science or a Graduate Level Machine Learning course.

Difficulty level: Advanced

Duration: 2:00:22

Speaker: Xavier Bresson

This tutorial covers the concept of graph convolutional networks and is part of the Deep Learning Course at NYU's Center for Data Science. Prerequisites for this module include: Modules 1 - 5 of this course and an Introduction to Data Science or a Graduate Level Machine Learning course.

Difficulty level: Advanced

Duration: 57:33

Speaker: Alfredo Canziani

This lecture covers the concept of model predictive control and is part of the Deep Learning Course at NYU's Center for Data Science. Prerequisites for this module include: Modules 1 - 6 of this course and an Introduction to Data Science or a Graduate Level Machine Learning course.

Difficulty level: Advanced

Duration: 1:10:22

Speaker: Alfredo Canziani

This lecture covers the concepts of emulating kinematics from observations and training a policy. It is part of the Deep Learning Course at NYU's Center for Data Science. Prerequisites for this module include: Modules 1 - 6 of this course and an Introduction to Data Science or a Graduate Level Machine Learning course.

Difficulty level: Advanced

Duration: 1:01:21

Speaker: Alfredo Canziani

This lecture covers the concept of predictive policy learning under uncertainty and is part of the Deep Learning Course at NYU's Center for Data Science. Prerequisites for this module include: Modules 1 - 6 of this course and an Introduction to Data Science or a Graduate Level Machine Learning course.

Difficulty level: Advanced

Duration: 1:14:44

Speaker: Alfredo Canziani

This lecture covers the concepts of gradient descent, stochastic gradient descent, and momentum. It is part of the Deep Learning Course at NYU's Center for Data Science. Prerequisites for this module include: Modules 1 - 7 of this course and an Introduction to Data Science or a Graduate Level Machine Learning course.

Difficulty level: Advanced

Duration: 1:29:05

Speaker: Aaron DeFazio

This lecture continues the topic of descent from the previous lesson, Optimization I. This lesson is part of the Deep Learning Course at NYU's Center for Data Science. Prerequisites for this module include: Modules 1 - 7 of this course and an Introduction to Data Science or a Graduate Level Machine Learning course.

Difficulty level: Advanced

Duration: 1:51:32

Speaker: Alfredo Canziani

This lesson gives an introduction to deep learning from the perspective of inductive biases, with an emphasis on correctly matching deep learning to the right research questions.

Difficulty level: Beginner

Duration: 01:35:12

Speaker: Blake Richards

This is a continuation of the talk on the cellular mechanisms of neuronal communication, this time at the level of brain microcircuits and associated global signals such as those measurable by electroencephalography (EEG). This lecture also discusses EEG biomarkers in mental health disorders, and how those cortical signatures may be simulated digitally.

Difficulty level: Intermediate

Duration: 1:11:04

Speaker: Etay Hay

The state of the field regarding the diagnosis and treatment of major depressive disorder (MDD) is discussed. Current challenges and opportunities facing the research and clinical communities are outlined, including appropriate quantitative and qualitative analyses of the heterogeneity of biological, social, and psychiatric factors which may contribute to MDD.

Difficulty level: Beginner

Duration: 1:29:28

Speaker: Brett Jones, Victor Tang

This lesson delves into the opportunities and challenges of telepsychiatry. While novel digital approaches to clinical research and care have the potential to improve and accelerate patient outcomes, researchers and care providers must consider new population factors, such as digital disparity.

Difficulty level: Beginner

Duration: 1:20:28

Speaker: Abhi Pratap

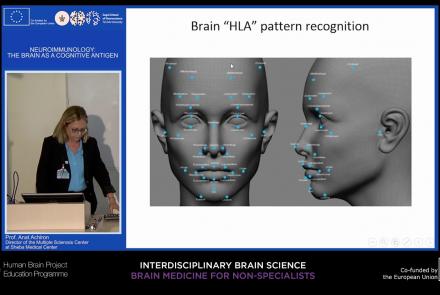

This lecture focuses on how the immune system can target and attack the nervous system to produce autoimmune responses that may result in diseases such as multiple sclerosis, neuromyelitis, and lupus cerebritis manifested by motor, sensory, and cognitive impairments. Despite the fact that the brain is an immune-privileged site, autoreactive lymphocytes producing proinflammatory cytokines can cause active brain inflammation, leading to myelin and axonal loss.

Difficulty level: Beginner

Duration: 37:36

Speaker: Anat Achiron

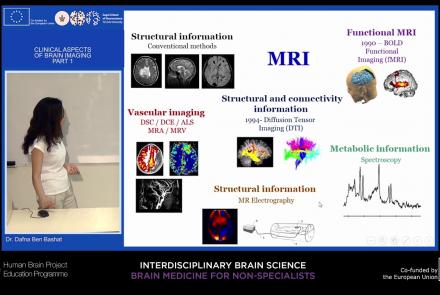

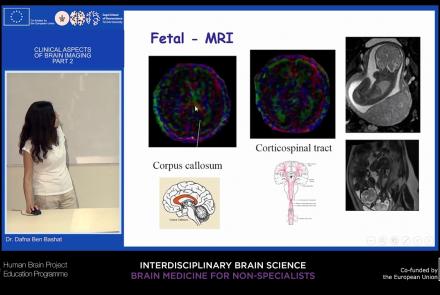

This lecture will provide an overview of neuroimaging techniques and their clinical applications.

Difficulty level: Beginner

Duration: 45:29

Speaker: Dafna Ben Bashat

This lecture picks up from the previous lesson, providing an overview of neuroimaging techniques and their clinical applications.

Difficulty level: Beginner

Duration: 41:00

Speaker: Dafna Ben Bashat

This lesson discusses state-of-the-art schemes for both the detection and the prevention of neurodegenerative diseases.

Difficulty level: Beginner

Duration: 1:02:29

Speaker: Nir Giladi

This lecture provides an overview of depression (epidemiology and course of the disorder), clinical presentation, somatic co-morbidity, and treatment options.

Difficulty level: Beginner

Duration: 37:51

Speaker: Barbara Sperner-Unterweger
