In this lesson you will learn how machine learners and neuroscientists construct abstract computational models based on various neurophysiological signalling properties.

Difficulty level: Intermediate

Duration: 10:52

Speaker: Dan Goodman

This lesson goes over the basic mechanisms of synapses, the junctions between neurons across which signals are transmitted.

Difficulty level: Intermediate

Duration: 7:03

Speaker: Marcus Ghosh

While the previous lesson in the Neuro4ML course dealt with the mechanisms involved in individual synapses, this lesson discusses how synapses and their neurons' firing patterns may change over time.

Difficulty level: Intermediate

Duration: 4:48

Speaker: Marcus Ghosh

Whereas the previous two lessons described the biophysical and signalling properties of individual neurons, this lesson describes the properties of those units when they are part of larger networks.

Difficulty level: Intermediate

Duration: 6:00

Speaker: Marcus Ghosh

This lesson goes over some examples of how machine learners and computational neuroscientists go about designing and building neural network models inspired by biological brain systems.

Difficulty level: Intermediate

Duration: 12:52

Speaker: Dan Goodman

This lesson delves into the human nervous system and the immense cellular, connectomic, and functional sophistication therein.

Difficulty level: Intermediate

Duration: 8:41

Speaker: Marcus Ghosh

This lesson characterizes different types of learning in a neuroscientific and cellular context, and describes various models that researchers use to investigate the mechanisms involved.

Difficulty level: Intermediate

Duration: 3:54

Speaker: Dan Goodman

In this lesson, you will learn about different approaches to modeling learning in neural networks, focusing in particular on how system parameters such as firing rates and synaptic weights affect a network.

Difficulty level: Intermediate

Duration: 9:40

Speaker: Dan Goodman

This lesson describes spike timing-dependent plasticity (STDP), a biological process that adjusts the strength of connections between neurons in the brain, and how one can implement or mimic this process in a computational model. You will also find links for practical exercises at the bottom of this page.

Difficulty level: Intermediate

Duration: 12:50

Speaker: Dan Goodman
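As a taste of how STDP can be mimicked in a computational model, here is a minimal sketch of the commonly used pair-based rule with exponential timing windows. It assumes all-to-all spike pairing and hard weight bounds, and the amplitudes (`a_plus`, `a_minus`) and time constants (`tau_plus`, `tau_minus`) are illustrative assumptions rather than values taken from the lesson; the function names are hypothetical.

```python
import math


def stdp_dw(delta_t, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Weight change for one spike pair, with delta_t = t_post - t_pre (ms).

    Pre-before-post (delta_t > 0) potentiates; post-before-pre depresses.
    All parameter values are illustrative assumptions.
    """
    if delta_t > 0:
        return a_plus * math.exp(-delta_t / tau_plus)
    if delta_t < 0:
        return -a_minus * math.exp(delta_t / tau_minus)
    return 0.0


def apply_stdp(w, pre_spikes, post_spikes, w_min=0.0, w_max=1.0):
    """Sum the contributions of every pre/post spike pair, then clip to [w_min, w_max]."""
    dw = sum(stdp_dw(t_post - t_pre) for t_pre in pre_spikes for t_post in post_spikes)
    return min(max(w + dw, w_min), w_max)


# Usage: a pre spike 5 ms before a post spike strengthens the synapse,
# while the reversed order weakens it.
w_causal = apply_stdp(0.5, pre_spikes=[10.0], post_spikes=[15.0])
w_acausal = apply_stdp(0.5, pre_spikes=[15.0], post_spikes=[10.0])
print(w_causal, w_acausal)
```

Making depression slightly stronger than potentiation (`a_minus > a_plus`) is one common way to keep weights from growing without bound under uncorrelated firing; other variants (nearest-neighbour pairing, soft bounds, triplet rules) are equally valid choices.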

In this lesson, you will learn about some of the many methods for training spiking neural networks (SNNs) that either do not use gradients at all or use them only in a limited or constrained way.

Difficulty level: Intermediate

Duration: 5:14

Speaker: Dan Goodman

In this lesson, you will learn how to train spiking neural networks (SNNs) with a surrogate gradient method.

Difficulty level: Intermediate

Duration: 11:23

Speaker: Dan Goodman
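The core idea behind surrogate gradients can be sketched in a few lines: the forward pass keeps the non-differentiable spike (a Heaviside step), while the backward pass substitutes a smooth pseudo-derivative. This toy example assumes the fast-sigmoid surrogate (one common choice, used for example in SuperSpike), a single weight, a one-step "neuron" `v = w * x`, and a squared-error loss on the spike output; the steepness `beta`, learning rate and all function names are hypothetical and are not taken from the lesson.

```python
def spike_fn(v, threshold=1.0):
    """Forward pass: Heaviside step. Its true derivative is zero almost everywhere."""
    return 1.0 if v >= threshold else 0.0


def surrogate_grad(v, threshold=1.0, beta=10.0):
    """Backward pass: derivative of a fast sigmoid, used in place of dS/dV."""
    return 1.0 / (beta * abs(v - threshold) + 1.0) ** 2


def train_single_weight(x=0.5, target=1.0, w=0.5, lr=1.0, steps=200):
    """Gradient descent on L = (S - target)^2 / 2 for a toy one-weight neuron."""
    for _ in range(steps):
        v = w * x                # membrane potential (no dynamics in this toy)
        s = spike_fn(v)          # exact spike in the forward pass
        # Chain rule with the surrogate: dL/dw = (S - target) * dS/dV * dV/dw
        grad = (s - target) * surrogate_grad(v) * x
        w -= lr * grad
    return w


# Usage: starting below threshold, the surrogate still provides a non-zero
# gradient, so the weight grows until the neuron emits the target spike.
w_final = train_single_weight()
print(w_final, spike_fn(w_final * 0.5))
```

With the true Heaviside derivative, `grad` would be zero at every step and the weight would never change; the surrogate is what lets the error signal flow through the spike.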

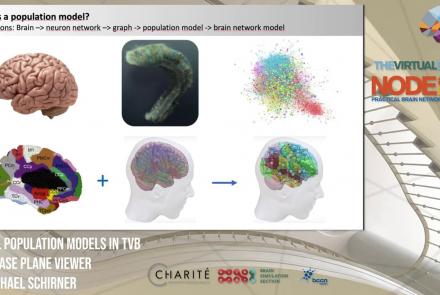

This lesson introduces population models and the phase plane, and is part of The Virtual Brain (TVB) Node 10 Series, a 4-day workshop dedicated to learning about the full-brain simulation platform TVB, as well as brain imaging, brain simulation, personalised brain models, and TVB use cases.

Difficulty level: Intermediate

Duration: 1:10:41

Speaker: Michael Schirner

This lesson introduces TVB-multi-scale extensions and other TVB tools that facilitate the modeling and analysis of multi-scale data.

Difficulty level: Intermediate

Duration: 36:10

Speaker: Dionysios Perdikis

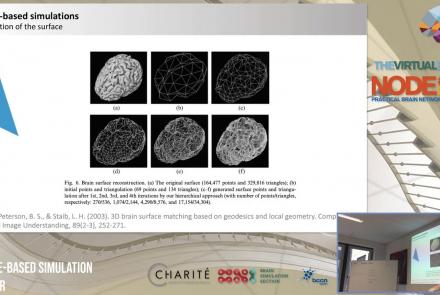

This lecture delves into cortical (i.e., surface-based) brain simulations, as well as subcortical (i.e., deep brain) stimulations, covering the definitions, motivations, and implementations of both.

Difficulty level: Intermediate

Duration: 39:05

Speaker: Jil Meier

This lecture provides an introduction to entropy in general, and multi-scale entropy (MSE) in particular, highlighting the potential clinical applications of the latter.

Difficulty level: Intermediate

Duration: 39:05

Speaker: Jil Meier

This lecture gives an overview of how to prepare and preprocess neuroimaging (EEG/MEG) data for use in TVB.

Difficulty level: Intermediate

Duration: 1:40:52

Speaker: Paul Triebkorn

In this lecture, you will learn about various neuroinformatics resources that enable the 3D reconstruction of brain models.

Difficulty level: Intermediate

Duration: 1:36:57

Speaker: Michael Schirner

This lesson provides a brief introduction to the Computational Modeling of Neuronal Plasticity.

Difficulty level: Intermediate

Duration: 0:40

Speaker: Florence I. Kleberg

In this lesson, you will be introduced to a type of neuronal model known as the leaky integrate-and-fire (LIF) model.

Difficulty level: Intermediate

Duration: 1:23

Speaker: Florence I. Kleberg
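For orientation, here is a minimal sketch of an LIF neuron integrated with the forward Euler method, based on the standard membrane equation $\tau_m \, dV/dt = -(V - V_{rest}) + R_m I$ with a threshold-and-reset rule. The parameter values (resting, reset and threshold potentials, membrane time constant, resistance) and the function name are illustrative assumptions, not the values used in the lesson.

```python
def simulate_lif(i_ext, dt=0.1, tau_m=10.0, v_rest=-70.0, v_reset=-75.0,
                 v_thresh=-50.0, r_m=10.0):
    """Forward-Euler simulation of a leaky integrate-and-fire neuron.

    i_ext: input current for each time step (nA); dt and tau_m in ms;
    voltages in mV; r_m in megaohms, so r_m * i is in mV.
    Returns the membrane-potential trace and the spike times (ms).
    """
    v = v_rest
    trace, spike_times = [], []
    for step, i in enumerate(i_ext):
        # Leaky integration: tau_m * dV/dt = -(V - V_rest) + R_m * I
        v += (dt / tau_m) * (-(v - v_rest) + r_m * i)
        if v >= v_thresh:
            spike_times.append((step + 1) * dt)  # threshold crossed: record spike
            v = v_reset                          # and reset the membrane potential
        trace.append(v)
    return trace, spike_times


# Usage: 200 ms of constant input at two amplitudes.
n_steps = 2000                              # 200 ms at dt = 0.1 ms
_, strong = simulate_lif([3.0] * n_steps)   # R*I = 30 mV: steady state -40 mV, above threshold
_, weak = simulate_lif([1.0] * n_steps)     # R*I = 10 mV: steady state -60 mV, below threshold
print(len(strong), len(weak))
```

The weak input never fires because, without a threshold, the membrane would settle at $V_{rest} + R_m I = -60$ mV; the strong input drives the neuron toward $-40$ mV, so it fires repeatedly and is reset after each spike.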

This lesson goes over various potential inputs to neuronal synapses, the sites of neural communication.

Difficulty level: Intermediate

Duration: 1:20

Speaker: Florence I. Kleberg
