Neurokernel: Emulating the Drosophila Brain on Multiple GPUs

In this lecture, the speaker demonstrates Neurokernel's module interfacing feature, using it to integrate independently developed models of the olfactory and vision LPUs based on experimentally obtained connectivity information.
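The lecture describes module interfacing only at a high level. The Python below is a minimal sketch of the general idea. It assumes that each LPU exposes named input and output ports and that connectivity data can be written as a list of port-to-port connections. The `Module` and `Pattern` classes, their methods, the module names, and the port names are all hypothetical. They are not Neurokernel's actual API.

```python
from dataclasses import dataclass, field


@dataclass
class Module:
    """Hypothetical stand-in for an LPU: named input and output ports holding values."""
    name: str
    in_ports: dict = field(default_factory=dict)
    out_ports: dict = field(default_factory=dict)


@dataclass
class Pattern:
    """Hypothetical connectivity pattern linking output ports of one module to input ports of another."""
    connections: list = field(default_factory=list)

    def connect(self, src, src_port, dst, dst_port):
        # Reject connections to ports that the modules do not expose.
        if src_port not in src.out_ports:
            raise KeyError(f"{src.name} has no output port {src_port}")
        if dst_port not in dst.in_ports:
            raise KeyError(f"{dst.name} has no input port {dst_port}")
        self.connections.append((src, src_port, dst, dst_port))

    def transfer(self):
        # Copy each connected output value into the matching input port.
        for src, src_port, dst, dst_port in self.connections:
            dst.in_ports[dst_port] = src.out_ports[src_port]


# Two independently written modules, joined only through their declared ports.
lpu_a = Module("lpu_a", out_ports={"/a/out[0]": 0.0})
lpu_b = Module("lpu_b", in_ports={"/b/in[0]": 0.0})

pattern = Pattern()
pattern.connect(lpu_a, "/a/out[0]", lpu_b, "/b/in[0]")

lpu_a.out_ports["/a/out[0]"] = 1.5  # lpu_a produces an output this step
pattern.transfer()                  # the pattern routes it to lpu_b
print(lpu_b.in_ports["/b/in[0]"])   # prints 1.5
```

In this design, neither module needs to know how the other is implemented. Only the port names and the connection list have to agree. That separation is what lets independently developed models be combined.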

Topics covered in this lesson

- Whole fly-brain modeling

- Local processing units (LPUs) and graphics processing units (GPUs)

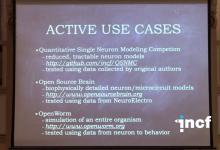

- Open-source, collaborative development

