This lesson provides an overview of the current status in the field of neuroscientific ontologies, presenting examples of data organization and standards, particularly from neuroimaging and electrophysiology.

Difficulty level: Intermediate

Duration: 33:41

Speaker: Yaroslav O. Halchenko

This tutorial shows how to simulate brain tumors with TVB (reproducing the publication Marinazzo et al. 2020, NeuroImage). It comprises a didactic video, Jupyter notebooks, and a full data set for constructing virtual brains of patients and healthy controls.

Difficulty level: Intermediate

Duration: 10:01

This tutorial on modelling stroke in TVB includes a didactic video and Jupyter notebooks (reproducing the publication Falcon et al. 2016, eNeuro).

Difficulty level: Intermediate

Duration: 7:43

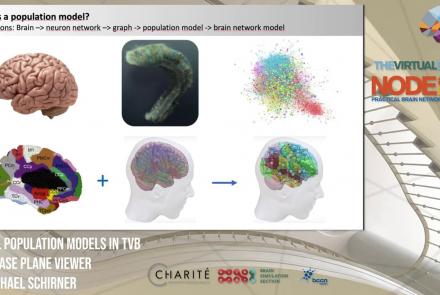

This lesson introduces population models and the phase plane, and is part of The Virtual Brain (TVB) Node 10 Series, a 4-day workshop dedicated to learning about the full brain simulation platform TVB, as well as brain imaging, brain simulation, personalised brain models, and TVB use cases.

Difficulty level: Intermediate

Duration: 1:10:41

Speaker: Michael Schirner
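Phase-plane analysis of a two-variable model usually means locating the nullclines, finding where they intersect (the fixed points), and classifying each fixed point from the trace and determinant of the Jacobian. The sketch below illustrates those steps on the FitzHugh-Nagumo system, used here only as a stand-in two-variable model; the equations and parameter values are assumptions, not necessarily the population model used in the lesson.

```python
import math

# Assumed classic FitzHugh-Nagumo parameters (illustrative, not from the lesson).
A, B, EPS, I_EXT = 0.7, 0.8, 0.08, 0.0

def vector_field(v, w):
    """Right-hand side (dv/dt, dw/dt) of the FitzHugh-Nagumo system."""
    dv = v - v ** 3 / 3 - w + I_EXT
    dw = EPS * (v + A - B * w)
    return dv, dw

def nullcline_gap(v):
    """v-nullcline (dv/dt = 0) minus w-nullcline (dw/dt = 0), both solved for w."""
    return (v - v ** 3 / 3 + I_EXT) - (v + A) / B

def fixed_point(lo=-3.0, hi=3.0, tol=1e-12):
    """Bisection on the nullcline gap; assumes exactly one sign change in [lo, hi]."""
    f_lo = nullcline_gap(lo)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = nullcline_gap(mid)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    v = 0.5 * (lo + hi)
    return v, (v + A) / B

def classify(v, w):
    """Linear stability from the Jacobian evaluated at (v, w)."""
    j11, j12 = 1 - v ** 2, -1.0
    j21, j22 = EPS, -EPS * B
    trace = j11 + j22
    det = j11 * j22 - j12 * j21
    if det < 0:
        return "saddle"
    return "stable" if trace < 0 else "unstable"

v_star, w_star = fixed_point()
print(f"fixed point: v={v_star:.4f}, w={w_star:.4f} -> {classify(v_star, w_star)}")
```

Changing `I_EXT` shifts the cubic v-nullcline up or down; re-running `classify` at the new intersection is a quick way to see the fixed point lose stability as the input grows.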

In this tutorial, you will learn how to run a typical TVB simulation.

Difficulty level: Intermediate

Duration: 1:29:13

Speaker: Paul Triebkorn

This lesson introduces TVB-multi-scale extensions and other TVB tools which facilitate modeling and analyses of multi-scale data.

Difficulty level: Intermediate

Duration: 36:10

Speaker: Dionysios Perdikis

This tutorial introduces The Virtual Mouse Brain (TVMB), walking users through the necessary steps for performing simulation operations on animal brain data.

Difficulty level: Intermediate

Duration: 42:43

Speaker: Patrik Bey

In this tutorial, you will learn the steps needed to model the brain of one of the most commonly studied non-human primates, the macaque.

Difficulty level: Intermediate

Duration: 1:00:08

Speaker: Julie Courtiol

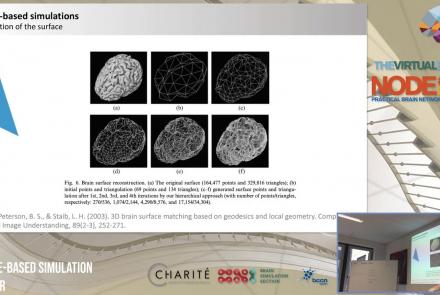

This lecture delves into cortical (i.e., surface-based) brain simulations, as well as subcortical (i.e., deep brain) stimulations, covering the definitions, motivations, and implementations of both.

Difficulty level: Intermediate

Duration: 39:05

Speaker: Jil Meier

This lecture provides an introduction to entropy in general, and multi-scale entropy (MSE) in particular, highlighting the potential clinical applications of the latter.

Difficulty level: Intermediate

Duration: 39:05

Speaker: Jil Meier
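Multi-scale entropy is commonly computed by coarse-graining a signal (averaging non-overlapping windows of length s) and then taking the sample entropy of each coarse-grained series. The sketch below follows that common recipe under stated assumptions: template length `m = 2`, a tolerance `r` of 0.2 times the standard deviation of the original series kept fixed across scales, and the Chebyshev distance between templates. These are typical defaults, not necessarily the settings used in the lecture.

```python
import math
import random
from statistics import pstdev

def coarse_grain(x, scale):
    """Average consecutive, non-overlapping windows of length `scale`."""
    n = len(x) // scale
    return [sum(x[i * scale:(i + 1) * scale]) / scale for i in range(n)]

def sample_entropy(x, m=2, r=0.2):
    """SampEn = -ln(A / B): B counts template pairs of length m within tolerance r,
    A counts pairs of length m + 1 (self-matches excluded, absolute tolerance r)."""
    n = len(x)

    def count_matches(length):
        # Use the same N - m starting points for both template lengths.
        templates = [x[i:i + length] for i in range(n - m)]
        count = 0
        for i in range(len(templates)):
            for j in range(i + 1, len(templates)):
                if max(abs(a - b) for a, b in zip(templates[i], templates[j])) <= r:
                    count += 1
        return count

    b = count_matches(m)
    a = count_matches(m + 1)
    if a == 0 or b == 0:
        return math.inf  # undefined: no matches found
    return -math.log(a / b)

def multiscale_entropy(x, max_scale=5, m=2, r_factor=0.2):
    """Sample entropy of the coarse-grained series at scales 1..max_scale."""
    r = r_factor * pstdev(x)  # tolerance fixed from the original series
    return [sample_entropy(coarse_grain(x, s), m, r) for s in range(1, max_scale + 1)]

random.seed(0)
noise = [random.gauss(0.0, 1.0) for _ in range(500)]
print(multiscale_entropy(noise, max_scale=3))
```

A perfectly regular signal such as `[0, 1] * 50` has a sample entropy of zero, because every template that matches at length m also matches at length m + 1; irregular signals give positive values.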

This lecture gives an overview of how to prepare and preprocess neuroimaging (EEG/MEG) data for use in TVB.

Difficulty level: Intermediate

Duration: 1:40:52

Speaker: Paul Triebkorn

In this lecture, you will learn about various neuroinformatics resources that allow for 3D reconstruction of brain models.

Difficulty level: Intermediate

Duration: 1:36:57

Speaker: Michael Schirner

This is the introductory module of the Deep Learning Course at NYU's Center for Data Science (CDS), a course covering the latest techniques in deep learning and representation learning, focusing on supervised and unsupervised deep learning, embedding methods, metric learning, and convolutional and recurrent nets, with applications to computer vision, natural language understanding, and speech recognition.

Difficulty level: Intermediate

Duration: 50:17

Speaker: Yann LeCun and Alfredo Canziani

This module covers the concepts of gradient descent and the backpropagation algorithm and is a part of the Deep Learning Course at NYU's Center for Data Science.

Difficulty level: Intermediate

Duration: 1:51:03

Speaker: Yann LeCun
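Backpropagation applies the chain rule from the loss back to each parameter, and gradient descent then steps every parameter against its gradient. The minimal sketch below shows both on a hypothetical one-hidden-unit network, `y_hat = w2 * tanh(w1 * x + b1) + b2`, trained with a mean squared error. The network, toy data, learning rate, and step count are illustrative assumptions, not material from the lecture.

```python
import math

def forward(params, x):
    """Tiny network: y_hat = w2 * tanh(w1 * x + b1) + b2; also returns the hidden unit."""
    w1, b1, w2, b2 = params
    h = math.tanh(w1 * x + b1)
    return w2 * h + b2, h

def loss(params, data):
    """Mean squared error with the conventional 1/2 factor."""
    return sum(0.5 * (forward(params, x)[0] - y) ** 2 for x, y in data) / len(data)

def backprop(params, data):
    """Gradient of `loss` w.r.t. (w1, b1, w2, b2), computed with the chain rule."""
    _, _, w2, _ = params
    grads = [0.0, 0.0, 0.0, 0.0]
    for x, y in data:
        y_hat, h = forward(params, x)
        e = y_hat - y                # dL/dy_hat
        dz = e * w2 * (1.0 - h * h)  # back through w2, then through tanh
        grads[0] += dz * x           # dL/dw1
        grads[1] += dz               # dL/db1
        grads[2] += e * h            # dL/dw2
        grads[3] += e                # dL/db2
    return [g / len(data) for g in grads]

def gradient_descent(params, data, lr=0.05, steps=1000):
    """Full-batch gradient descent: move each parameter against its gradient."""
    for _ in range(steps):
        params = [p - lr * g for p, g in zip(params, backprop(params, data))]
    return params

# Hypothetical toy data generated by a "teacher" network with known parameters.
data = [(x / 2, forward([1.5, 0.2, 0.8, -0.1], x / 2)[0]) for x in range(-4, 5)]
init = [0.5, 0.0, 0.5, 0.0]
trained = gradient_descent(init, data)
print(loss(init, data), "->", loss(trained, data))
```

A standard sanity check is to compare `backprop` against central finite differences of `loss`; the two should agree closely for a correct implementation.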

This lecture covers concepts associated with neural nets, including rotation and squashing, and is a part of the Deep Learning Course at New York University's Center for Data Science (CDS).

Difficulty level: Intermediate

Duration: 1:01:53

Speaker: Alfredo Canziani
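A common way to picture a neural net layer is as a linear map that rotates (and scales) the input space, followed by an element-wise "squashing" non-linearity. The sketch below makes this concrete in 2-D, assuming a pure rotation matrix for the linear part and `tanh` as the squashing function. Both choices are simplifications for illustration; a trained layer uses a general weight matrix.

```python
import math

def rotate(vec, theta):
    """Apply the 2-D rotation matrix R(theta) to a vector (the linear part of a layer)."""
    x, y = vec
    c, s = math.cos(theta), math.sin(theta)
    return (c * x - s * y, s * x + c * y)

def squash(vec):
    """Element-wise tanh: squeezes every coordinate into the interval (-1, 1)."""
    return tuple(math.tanh(v) for v in vec)

def layer(vec, theta, scale=1.0):
    """Hypothetical toy layer: rotate, scale, then squash."""
    rx, ry = rotate(vec, theta)
    return squash((scale * rx, scale * ry))

points = [(1.0, 0.0), (0.0, 2.0), (-3.0, 1.0)]
print([layer(p, math.pi / 4, scale=2.0) for p in points])
```

The rotation preserves lengths and angles, so on its own it cannot separate classes that were not already separable; the squashing step is what bends the space non-linearly.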

This lesson provides a detailed description of some of the modules and architectures involved in the development of neural networks.

Difficulty level: Intermediate

Duration: 1:42:26

Speaker: Yann LeCun and Alfredo Canziani

This lecture covers neural net training (tools, classification with neural nets, and a PyTorch implementation) and is part of the Deep Learning Course at NYU's Center for Data Science.

Difficulty level: Intermediate

Duration: 1:05:47

Speaker: Alfredo Canziani
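Classification training typically combines a linear layer, a softmax over class scores, and a cross-entropy loss, whose gradient with respect to the logits is simply `softmax(logits) - one_hot(label)`. The lecture uses PyTorch; the sketch below is a plain-Python stand-in for the same computation so the gradient is visible. The toy data, learning rate, and epoch count are hypothetical.

```python
import math

def softmax(logits):
    """Numerically stable softmax (subtract the max before exponentiating)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def predict(W, b, x):
    """Logits of a linear classifier: one weight row and one bias per class."""
    return [sum(wi * xi for wi, xi in zip(row, x)) + bk for row, bk in zip(W, b)]

def cross_entropy_step(W, b, data, lr=0.5):
    """One full-batch gradient step; returns updated (W, b) and the loss before the step."""
    n_classes, n_features = len(W), len(W[0])
    gW = [[0.0] * n_features for _ in range(n_classes)]
    gb = [0.0] * n_classes
    total_loss = 0.0
    for x, label in data:
        p = softmax(predict(W, b, x))
        total_loss -= math.log(p[label])
        for k in range(n_classes):
            d = p[k] - (1.0 if k == label else 0.0)  # dL/dlogit_k
            gb[k] += d
            for j in range(n_features):
                gW[k][j] += d * x[j]
    n = len(data)
    W = [[w - lr * g / n for w, g in zip(row, grow)] for row, grow in zip(W, gW)]
    b = [bk - lr * g / n for bk, g in zip(b, gb)]
    return W, b, total_loss / n

# Hypothetical toy data: three well-separated 2-D clusters, one per class.
data = [((2.0, 0.0), 0), ((2.5, 0.5), 0), ((-2.0, 1.0), 1), ((-2.5, 0.5), 1),
        ((0.0, -2.0), 2), ((0.5, -2.5), 2)]
W, b = [[0.0, 0.0] for _ in range(3)], [0.0, 0.0, 0.0]
for epoch in range(200):
    W, b, loss = cross_entropy_step(W, b, data)
accuracy = sum(max(range(3), key=lambda k: predict(W, b, x)[k]) == y for x, y in data) / len(data)
print(f"final loss {loss:.3f}, training accuracy {accuracy:.0%}")
```

With all-zero initial weights every class gets probability 1/3, so the starting loss is ln 3; training should push it well below that on separable data like this.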

This lecture covers parameter sharing in recurrent and convolutional nets and is part of the Deep Learning Course at NYU's Center for Data Science.

Difficulty level: Intermediate

Duration: 1:59:47

Speaker: Yann LeCun and Alfredo Canziani
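Parameter sharing means reusing the same weights at many positions: a convolution slides one kernel over every location of the input, and a recurrent net applies one set of weights at every time step. The sketch below shows both in their simplest scalar form. The kernel, the RNN weights, and the function names are hypothetical choices for illustration.

```python
import math

def conv1d(x, kernel):
    """'Valid' 1-D cross-correlation: the same kernel weights are reused at every position."""
    k = len(kernel)
    return [sum(w * x[i + j] for j, w in enumerate(kernel)) for i in range(len(x) - k + 1)]

def rnn(xs, w_x, w_h, b, h0=0.0):
    """Scalar Elman-style RNN: the same (w_x, w_h, b) are applied at every time step."""
    h, states = h0, []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

signal = [0, 0, 1, 2, 3, 0, 0, 0]
edge = [1, -1]  # hypothetical 2-tap difference kernel
print(conv1d(signal, edge))
print(conv1d([0] + signal[:-1], edge))  # input shifted right by one sample
print(rnn([1.0, 0.0, 0.0], w_x=0.5, w_h=0.9, b=0.0))
```

The convolution has two parameters regardless of input length, and shifting the input shifts the output by the same amount (exactly here, because the dropped last sample is zero; in general, up to boundary effects).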

This lecture covers convolutional nets in practice and is part of the Deep Learning Course at NYU's Center for Data Science.

Difficulty level: Intermediate

Duration: 51:40

Speaker: Yann LeCun

This lecture discusses the properties of natural signals and convolutional nets in practice, and is part of the Deep Learning Course at NYU's Center for Data Science.

Difficulty level: Intermediate

Duration: 1:09:12

Speaker: Alfredo Canziani

Topics

- Artificial Intelligence (1)

- Notebooks (1)

- Provenance (1)

- DANDI archive (1)

- EBRAINS RI (6)

- Animal models (2)

- Brain-hardware interfaces (1)

- Clinical neuroscience (23)

- General neuroscience (17)

- General neuroinformatics (1)

- Computational neuroscience (81)

- Statistics (5)

- Computer Science (5)

- Genomics (8)

- Data science (10)

- Open science (5)

- Project management (1)

- Education (1)

- Neuroethics (5)