Manipulate the default connectome provided with TVB to see how structural lesions affect brain dynamics. In this hands-on session you will insert lesions into the connectome within the TVB graphical user interface (GUI). The modified connectome is then used for simulations, and the resulting activity is analysed using functional connectivity.
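The session itself works in the TVB GUI, but the two ideas it relies on can be sketched in a few lines of plain Python. The sketch below is an illustration only and does not use the TVB API: `lesion_region`, `pearson`, and `functional_connectivity` are hypothetical helper names, the 3-region weight matrix and time series are made-up toy values, and functional connectivity is assumed to mean the pairwise Pearson correlation between regional time series.

```python
import math


def lesion_region(weights, region):
    """Return a copy of the weight matrix with all connections to and from `region` set to zero."""
    lesioned = [row[:] for row in weights]
    for i in range(len(lesioned)):
        lesioned[region][i] = 0.0  # outgoing connections
        lesioned[i][region] = 0.0  # incoming connections
    return lesioned


def pearson(x, y):
    """Pearson correlation of two equally long, non-constant series."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    norm_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    norm_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (norm_x * norm_y)


def functional_connectivity(series):
    """Region-by-region correlation matrix; `series` holds one time series per region."""
    n = len(series)
    return [[pearson(series[i], series[j]) for j in range(n)] for i in range(n)]


# Toy structural connectome (hypothetical values), then a lesion of region 1.
weights = [
    [0.0, 2.0, 1.0],
    [2.0, 0.0, 3.0],
    [1.0, 3.0, 0.0],
]
lesioned = lesion_region(weights, region=1)

# Toy activity standing in for simulated output (hypothetical values).
toy_series = [
    [1.0, 2.0, 3.0, 4.0],
    [2.0, 4.0, 6.0, 8.0],
    [4.0, 3.0, 2.0, 1.0],
]
fc = functional_connectivity(toy_series)
```

In practice the lesioned matrix would drive a new simulation, and the functional connectivity of that simulated activity would be compared with the result from the intact connectome.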

Difficulty level: Beginner

Duration: 31:22

Speaker: Paul Triebkorn

Course:

This lesson introduces the EEGLAB toolbox, as well as motivations for its use.

Difficulty level: Beginner

Duration: 15:32

Speaker: Arnaud Delorme

Course:

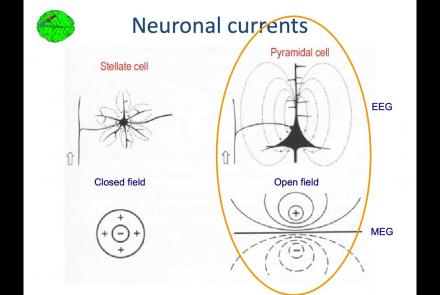

In this lesson, you will learn about the biological activity which generates and is measured by the EEG signal.

Difficulty level: Beginner

Duration: 6:53

Speaker: Arnaud Delorme

Course:

This lesson goes over the characteristics of EEG signals when analyzed in source space (as opposed to sensor space).

Difficulty level: Beginner

Duration: 10:56

Speaker: Arnaud Delorme

Course:

This lesson describes the development of EEGLAB as well as to what extent it is used by the research community.

Difficulty level: Beginner

Duration: 6:06

Speaker: Arnaud Delorme

Course:

This lesson provides instruction as to how to build a processing pipeline in EEGLAB for a single participant.

Difficulty level: Beginner

Duration: 9:20

Speaker:

Course:

Whereas the previous lesson of this course outlined how to build a processing pipeline for a single participant, this lesson discusses analysis pipelines for multiple participants simultaneously.

Difficulty level: Beginner

Duration: 10:55

Speaker: Arnaud Delorme

Course:

In addition to outlining the motivations behind preprocessing EEG data in general, this lesson covers the first step in preprocessing data with EEGLAB, importing raw data.

Difficulty level: Beginner

Duration: 8:30

Speaker: Arnaud Delorme

Course:

Continuing along the EEGLAB preprocessing pipeline, this tutorial walks users through how to import data events as well as EEG channel locations.

Difficulty level: Beginner

Duration: 11:53

Speaker: Arnaud Delorme

Course:

This tutorial instructs users how to visually inspect partially pre-processed neuroimaging data in EEGLAB, specifically how to use the data browser to investigate specific channels, epochs, or events for removable artifacts, biological (e.g., eye blinks, muscle movements, heartbeat) or otherwise (e.g., corrupt channel, line noise).

Difficulty level: Beginner

Duration: 5:08

Speaker: Arnaud Delorme

Course:

This tutorial provides instruction on how to use EEGLAB to further preprocess EEG datasets by identifying and discarding bad channels which, if left unaddressed, can corrupt and confound subsequent analysis steps.

Difficulty level: Beginner

Duration: 13:01

Speaker: Arnaud Delorme

Course:

Users following this tutorial will learn how to identify and discard bad EEG data segments using the MATLAB toolbox EEGLAB.

Difficulty level: Beginner

Duration: 11:25

Speaker: Arnaud Delorme

This lecture gives an overview of how to prepare and preprocess neuroimaging (EEG/MEG) data for use in TVB.

Difficulty level: Intermediate

Duration: 1:40:52

Speaker: Paul Triebkorn

Course:

Neuronify is an educational tool meant to create intuition for how neurons and neural networks behave. You can use it to combine neurons with different connections, just like the ones we have in our brain, and explore how changes in single cells lead to behavioral changes in important networks. Neuronify is based on an integrate-and-fire model of neurons, one of the simplest neuron models available. It focuses on the spike timing of a neuron and ignores the details of the action potential dynamics. These neurons are modeled as simple RC circuits. When the membrane potential rises above a certain threshold, a spike is generated and the voltage is reset to its resting potential. This spike then signals other neurons through the neuron's synapses.
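The integrate-and-fire mechanism described above can be sketched as a short simulation. This is a minimal illustration, not Neuronify's implementation: the function name `simulate_lif`, the forward-Euler integration, and all parameter values (time constant, resistance, resting and threshold potentials) are assumptions chosen for the example.

```python
def simulate_lif(current, duration_ms=100.0, dt=0.1, tau_ms=10.0,
                 resistance=1.0, v_rest=-65.0, v_threshold=-50.0):
    """Simulate one leaky integrate-and-fire neuron driven by a constant input current.

    The membrane is treated as an RC circuit:
        tau * dV/dt = -(V - v_rest) + resistance * current
    Returns the list of spike times in milliseconds.
    """
    v = v_rest
    spike_times = []
    n_steps = int(round(duration_ms / dt))
    for step in range(n_steps):
        # Forward-Euler update of the membrane potential.
        dv = (-(v - v_rest) + resistance * current) / tau_ms
        v += dv * dt
        if v >= v_threshold:
            # Threshold crossed: record a spike and reset to the resting potential.
            spike_times.append((step + 1) * dt)
            v = v_rest
    return spike_times


weak_input = simulate_lif(current=10.0)    # steady state stays below threshold
strong_input = simulate_lif(current=20.0)  # steady state exceeds threshold
```

With these illustrative values the weak input never reaches threshold, while the strong input produces a regular spike train; raising the current further shortens the interval between spikes.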

Neuronify aims to provide a low entry point to simulation-based neuroscience.

Difficulty level: Beginner

Duration: 01:25

Speaker: Neuronify

Course:

This lesson gives an introduction to the central concepts of machine learning, and how they can be applied in Python using the scikit-learn package.

Difficulty level: Intermediate

Duration: 2:22:28

Speaker: Jake Vanderplas

This lesson provides an overview of self-supervision as it relates to neural data tasks and the Mine Your Own vieW (MYOW) approach.

Difficulty level: Beginner

Duration: 25:50

Speaker: Eva Dyer

This lecture provides an introduction to entropy in general, and multi-scale entropy (MSE) in particular, highlighting the potential clinical applications of the latter.

Difficulty level: Intermediate

Duration: 39:05

Speaker: Jil Meier

This lecture provides a general introduction to epilepsy, as well as why and how TVB can prove useful in building and testing epileptic models.

Difficulty level: Intermediate

Duration: 37:12

Speaker: Julie Courtiol

Course:

This lecture covers the rationale for developing the DAQCORD, a framework for the design, documentation, and reporting of data curation methods in order to advance the scientific rigour, reproducibility, and analysis of data.

Difficulty level: Intermediate

Duration: 17:08

Speaker: Ari Ercole

This session presents the federated analytics of the Medical Informatics Platform (MIP). It details the analytical tools currently implemented in the MIP and those planned for the future, along with the constructs, tools, processes, and restrictions that shape the solution. The MIP is a platform providing advanced federated analytics for diagnosis and research in clinical neuroscience. It targets clinicians, clinical scientists, and clinical data scientists. It is designed to help them adopt advanced analytics and explore harmonized medical data from neuroimaging, neurophysiology, and medical records, as well as research cohort datasets, without transferring original clinical data. It can be seen as a virtual database that seamlessly presents aggregated data from distributed sources and provides access to, and analysis of, imaging and clinical data securely stored in hospitals, research archives, and public databases. It leverages and reuses decentralized patient data and research cohort datasets. Integrated statistical analysis tools and machine learning algorithms are exposed over the harmonized, federated medical data.
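The core idea of federated analytics, computing a result across sites while the original records stay where they are, can be illustrated with a small sketch. This is not the MIP's implementation or API: `local_summary` and `combine` are hypothetical names, the site data are made-up numbers, and the example assumes each site is allowed to share only a count, a sum, and a sum of squares.

```python
def local_summary(values):
    """Runs at a data site: reduce the raw values to aggregates that are safe to share."""
    return {
        "n": len(values),
        "sum": sum(values),
        "sum_sq": sum(v * v for v in values),
    }


def combine(summaries):
    """Runs at the coordinator: pool per-site aggregates into a global mean and variance."""
    n = sum(s["n"] for s in summaries)
    total = sum(s["sum"] for s in summaries)
    total_sq = sum(s["sum_sq"] for s in summaries)
    mean = total / n
    variance = total_sq / n - mean * mean  # population variance
    return mean, variance


# Hypothetical measurements held at two separate sites.
site_a = [1.0, 2.0, 3.0]
site_b = [4.0, 5.0]

# Only the summaries travel to the coordinator, never the raw lists.
mean, variance = combine([local_summary(site_a), local_summary(site_b)])
```

The pooled mean and variance match what a single analysis over all five values would give, even though the coordinator never sees an individual measurement.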

Difficulty level: Intermediate

Duration: 15:05

Speaker: Giorgos Papanikos
